🤖 AI Weekly Recap (Week 47)

This week’s top AI news, breakthroughs, and game-changing updates

Good morning, AI enthusiast. AI just had another wild week. Google dropped Gemini 3, its most advanced multimodal model yet, built for near-real-time reasoning, deep research, and multi-agent workflows. Meanwhile, OpenAI rolled out Codex-Max, an agentic coding model that prunes its own memory to sustain 24-hour-plus sessions and tackle massive projects end-to-end.

Plus: The most important news and breakthroughs in AI this week.

Google has launched Gemini 3, its most powerful AI model to date, arriving just seven months after Gemini 2.5 and intensifying the frontier-model race. With record-breaking scores in reasoning benchmarks and a new agentic coding experience, Gemini 3 positions Google as a top contender against OpenAI and Anthropic.

→ Achieves 37.4 on Humanity’s Last Exam, the highest score ever recorded, surpassing GPT-5 Pro’s 31.64

→ Leads LMArena, the human-led satisfaction benchmark

→ Rolling out “Gemini 3 Deep Think” soon for Google AI Ultra subscribers after additional safety testing

→ Over 650M monthly active users, with 13M developers integrating Gemini into their workflows

→ Ships with Google Antigravity, a multi-pane agentic coding interface combining chat, terminal, and browser windows

🧰 Who is This Useful For:

Developers building intelligent, multi-step coding agents and IDE workflows

Startups exploring high-performance, cost-efficient AI alternatives to GPT-based systems

Researchers evaluating frontier reasoning benchmarks and multi-modal agent behavior

Engineering teams needing integrated AI tools for code generation, debugging, and automation

Try it Now → https://gemini.google/

Replit has launched Design Mode, a new Gemini 3-powered feature that lets anyone build complete app interfaces and static websites simply by describing what they want. The update turns Replit into an end-to-end visual design and prototyping tool, with no design skills or coding required.

→ Built on Google’s Gemini 3, enabling polished layouts, color systems, typography, and visual hierarchy

→ Generates interactive mockups and static sites in under two minutes from natural-language prompts

→ Supports rapid iteration through conversational edits, with no manual tweaking or engineering handoff

→ Can create landing pages, portfolios, and business sites 5× faster than traditional website builders

🧰 Who is This Useful For:

Product managers rapidly prototyping concepts without relying on engineering

Designers creating high-quality mockups and clickable prototypes instantly

Founders spinning up landing pages, portfolios, or business sites in minutes

Try it Now → https://replit.com/

Elon Musk’s xAI has released Grok 4.1, a major upgrade focused on emotional intelligence, creative writing, and factual accuracy. The new model now leads global benchmarks in empathy-driven conversations while drastically reducing hallucinations across real-world tasks.

→ Dominates LMArena’s Text Arena with a 1,483 Elo score, 31 points above the nearest competitor

→ Scores 1,586 on EQ-Bench3, a massive jump from Grok 4’s 1,206, thanks to AI-evaluated reinforcement learning

→ Hallucination rates drop from 12.09% to 4.22%, with biographical errors falling from 9.89% to under 3%

→ Achieves 1,708.6 Elo in creative writing, matching the world’s top narrative-generation models
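
To put the 31-point Text Arena lead in perspective, here is a minimal sketch that converts a rating gap into an expected head-to-head win rate. It assumes LMArena scores behave like classic Elo ratings on the usual 400-point logistic scale, which is an assumption on my part and not something the leaderboard figures above confirm. `expected_score` is an illustrative helper, not an LMArena API.

```python
def expected_score(rating_gap: float, scale: float = 400.0) -> float:
    """Expected win rate of the higher-rated model under the classic Elo formula.

    Assumption: ratings follow the standard logistic Elo curve with a
    400-point scale. The leaderboard may use a different fitting method.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    gap = 31  # points above the nearest competitor, as reported above
    print(f"Expected win rate at a {gap}-point gap: {expected_score(gap):.1%}")
```

Under that assumption, a 31-point gap works out to roughly a 54% expected win rate in head-to-head votes, so the lead is meaningful but not a blowout.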

🧰 Who is This Useful For:

Writers, creators, and storytellers needing emotionally rich, stylistically consistent AI collaboration

Customer-facing teams requiring empathetic and accurate conversational agents

Researchers exploring AI emotional intelligence, reinforcement learning, and behavioral alignment

Users who want a distinctive, personality-driven chatbot with sharper accuracy and lower error rates

Try it Now → https://grok.com/

Google has unveiled Antigravity, an “agent-first” development environment powered by the new Gemini 3 Pro model and designed for a future where multi-agent coding systems work autonomously across tools, browsers, and terminals. The platform emphasizes transparency, verification, and multi-agent orchestration inside a single workspace.

→ Supports multiple autonomous agents with direct access to the editor, terminal, and browser

→ Introduces Artifacts (task lists, plans, screenshots, and browser recordings) that verify each step of an agent’s work

→ Offers two modes: Editor View for IDE-style workflows and Manager View for orchestrating multiple agents in parallel

→ Lets users leave contextual comments on Artifacts without interrupting the agent’s flow

🧰 Who is This Useful For:

Developers building agentic coding workflows with browser and system-level tool access

Teams managing complex, multi-agent development pipelines

Engineers needing verifiable, transparent outputs

Companies exploring autonomous software development

Try it Now → https://antigravity.google/

OpenAI has launched GPT-5.1 Codex-Max, a major upgrade to its agentic coding model designed to handle long-running development tasks, massive context windows, and multi-hour build cycles with higher efficiency and reasoning accuracy.

→ Uses a new “compaction” technique to prune session history while preserving context across millions of tokens

→ Outperforms Codex-High and beats Google’s Gemini 3 Pro on advanced coding and reasoning benchmarks

→ Uses 30% fewer tokens while running faster due to optimized reasoning and execution flow

→ Capable of maintaining continuous 24+ hour development sessions without losing context
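
For readers curious what “compaction” could look like in practice, here is a toy sketch of the general idea: once a session exceeds a token budget, older turns are collapsed into a short summary while the most recent turns are kept verbatim. This is not OpenAI's implementation, which is not described in detail here. The names `compact`, `count_tokens`, and `naive_summary` are hypothetical, the token count is a crude word count, and a real system would presumably use a model-generated summary.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def naive_summary(messages: List[str]) -> str:
    # Placeholder summarizer: keep only the first five words of each message.
    # A real agent would ask a model to write the summary instead.
    return "SUMMARY: " + " | ".join(" ".join(m.split()[:5]) for m in messages)


def compact(
    history: List[str],
    budget: int,
    keep_recent: int = 2,
    summarize: Callable[[List[str]], str] = naive_summary,
) -> List[str]:
    """Return the history unchanged if it fits the budget; otherwise replace
    everything except the last `keep_recent` messages with one summary."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return list(history)
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


if __name__ == "__main__":
    session = [f"step {i}: edited module {i} and reran the test suite" for i in range(6)]
    for line in compact(session, budget=20):
        print(line)
```

The design choice worth noticing is that recent turns stay untouched, since they are most likely to matter for the next step, while older detail is traded for a shorter summary.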

🧰 Who is This Useful For:

Developers building large, multi-hour or multi-file codebases with AI agents

Teams running continuous integration, debugging, and long-form development workflows

Startups seeking faster, cheaper agentic coding systems

Researchers exploring persistent-context AI models

Try it Now → https://openai.com/index/gpt-5-1-codex-max/

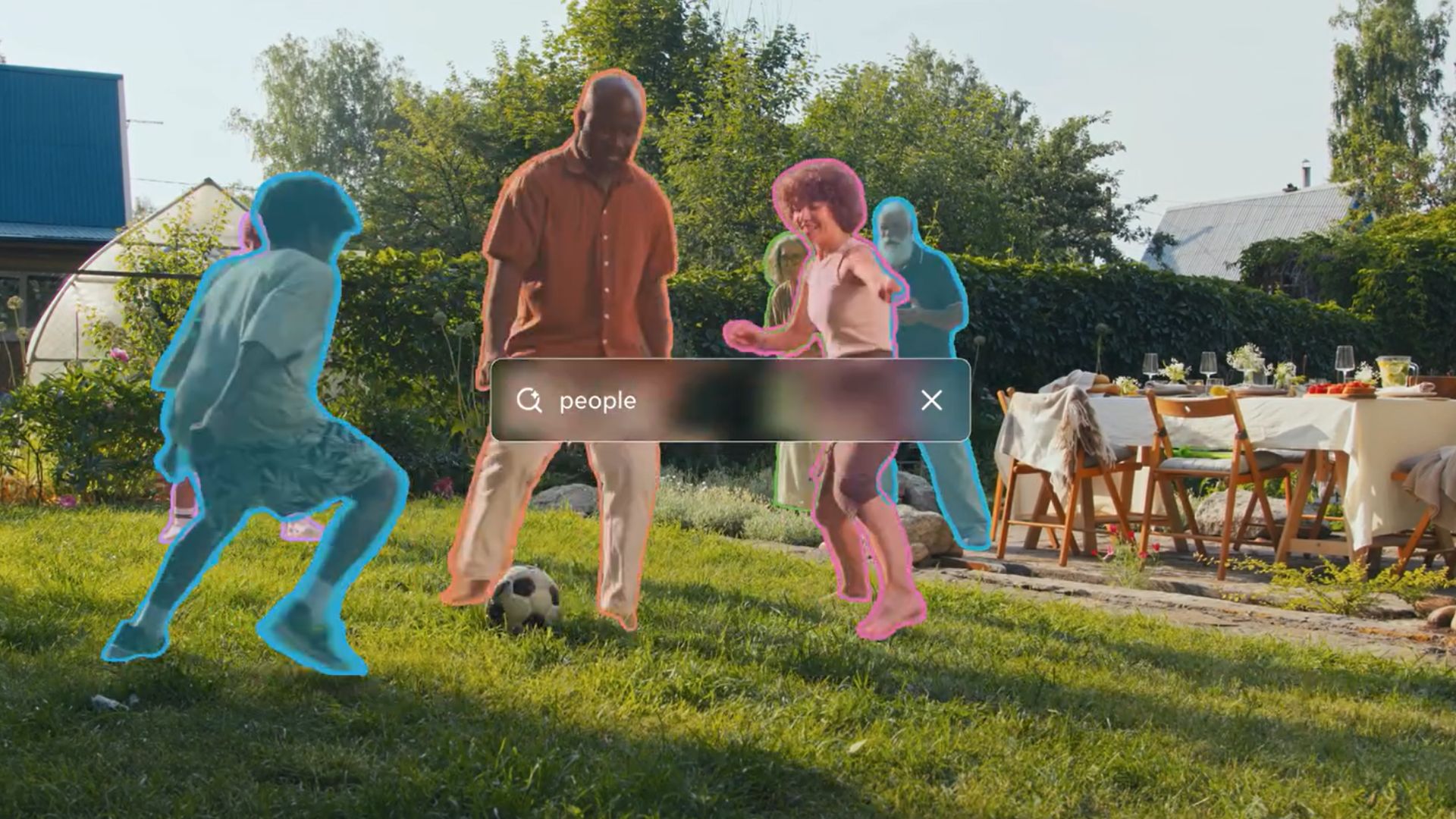

Meta has released SAM 3, the third-generation Segment Anything Model, designed to blur the line between language and vision. Unlike traditional segmentation models restricted to fixed labels, SAM 3 uses an open vocabulary and a hybrid human–AI training pipeline to identify and segment concepts across both images and videos.

→ Supports text prompts, exemplar images, and visual prompts to isolate any concept

→ “Promptable Concept Segmentation” finds every instance of a noun phrase like “striped red umbrella”

→ Outperforms GLEE, OWLv2, and even Gemini 2.5 Pro on Meta’s new SA-Co benchmark

→ Trained via a hybrid data engine mixing AI-generated masks with human verification, producing 4M+ unique concepts

🧰 Who is This Useful For:

Creators and editors applying effects to specific objects or people in images and videos

Developers building open-vocabulary vision systems for real-world applications

Researchers studying the intersection of language, segmentation, and concept understanding

Businesses seeking near real-time, large-scale image and video segmentation for automation

Try it Now → https://ai.meta.com/sam3/

ElevenLabs has unveiled Image & Video, a major expansion that transforms the company from a voice-first tool into a complete multimedia creation platform. The new system unifies image generation, video animation, voice synthesis, music creation, and sound design into a single production workflow.

→ Generates visuals, animates sequences, and adds voiceovers, music, and sound effects in one integrated timeline

→ Supports vertical and horizontal formats, with outputs ready for TikTok, Reels, and YouTube

→ Includes a commercially safe library of licensed voices and music for instant ad-grade use

→ Delivers multilingual dubbing with one-click voice replacement across any language

🧰 Who is This Useful For:

Creators producing social-first videos without juggling multiple apps

Marketing teams building fast, localized ad variations at scale

Agencies needing cinematic visuals paired with natural-sounding narration

Businesses seeking end-to-end AI content creation inside one platform

Try it Now → https://elevenlabs.io/studio

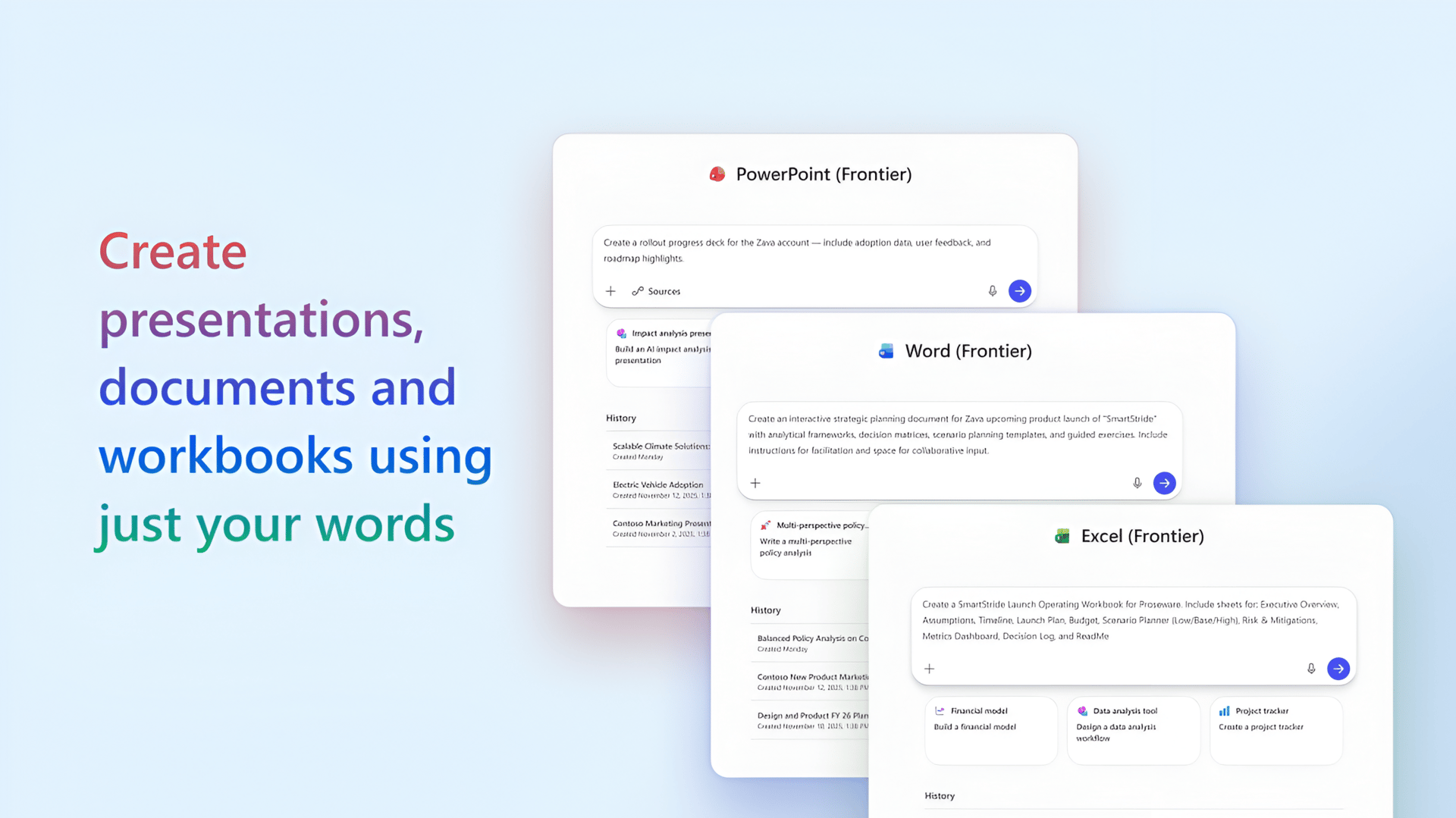

At Ignite 2025, Microsoft introduced dedicated AI agents for Word, Excel, and PowerPoint inside Microsoft 365 Copilot, enabling users to generate full documents, spreadsheets, and presentations directly from natural language prompts.

→ Creates Word docs, Excel sheets, and multi-slide decks from chat instructions

→ Agents ask clarifying questions and refine drafts using advanced reasoning

→ “Agent Mode” allows direct edits inside Office apps with full feature support

→ Pulls enterprise context like emails, notes, and data (with permissions)

→ Works inside a secure sandbox with no open internet access

→ Outputs maintain themes, formulas, layouts, and sensitivity labels

🧰 Who is This Useful For:

Knowledge workers automating document creation and reporting workflows

Teams generating presentations, proposals, and strategies at scale

Analysts using Excel for multi-step data tasks powered by AI agents

Enterprises looking for secure, AI-driven productivity tools inside Microsoft 365

Try it Now → https://m365.cloud.microsoft/

Thanks for making it to the end! I put my heart into every email I send, and I hope you are enjoying them. Let me know your thoughts so I can make the next one even better! See you tomorrow.

- Dr. Alvaro Cintas