- Simplifying AI

🧑‍💻 Claude Code sparks “Selfware” fears

PLUS: How to run Claude Code locally for 100% free and fully private

Good Morning! Claude Code is going viral among developers, and the hype is now spilling over into public markets, shaking traditional software stocks. Plus, you’ll learn how to run Claude Code entirely on your own machine using local open-source models.

In today’s AI newsletter:

How to Run Claude Code Locally (100% Free & Fully Private)

Claude Code Sparks “Selfware” Fears

GLM-4.7-Flash Dominates 30B Class

OpenAI Adds Age Prediction to ChatGPT

4 new AI tools worth trying

AI ECONOMICS

Anthropic’s Claude Code is having a breakout moment, with devs and founders using it to build full apps shockingly fast. Investors are now questioning the future of traditional SaaS in a world where AI can build custom software on demand.

Morgan Stanley’s SaaS index is down 15% YTD, with Adobe, Intuit, and Salesforce falling double digits

Vercel’s CTO finished a year-long project in a week using Claude Code

Founders report 5× productivity gains and canceling plans to hire engineers

Apps built fully with Claude (from MRI viewers to automation tools) are going viral

SaaS valuations rely on predictable recurring revenue, but AI-native “selfware” breaks that logic. If everyone can build custom tools instantly, traditional software moats may shrink fast, forcing a rethink of how software companies create value.

AI MODELS

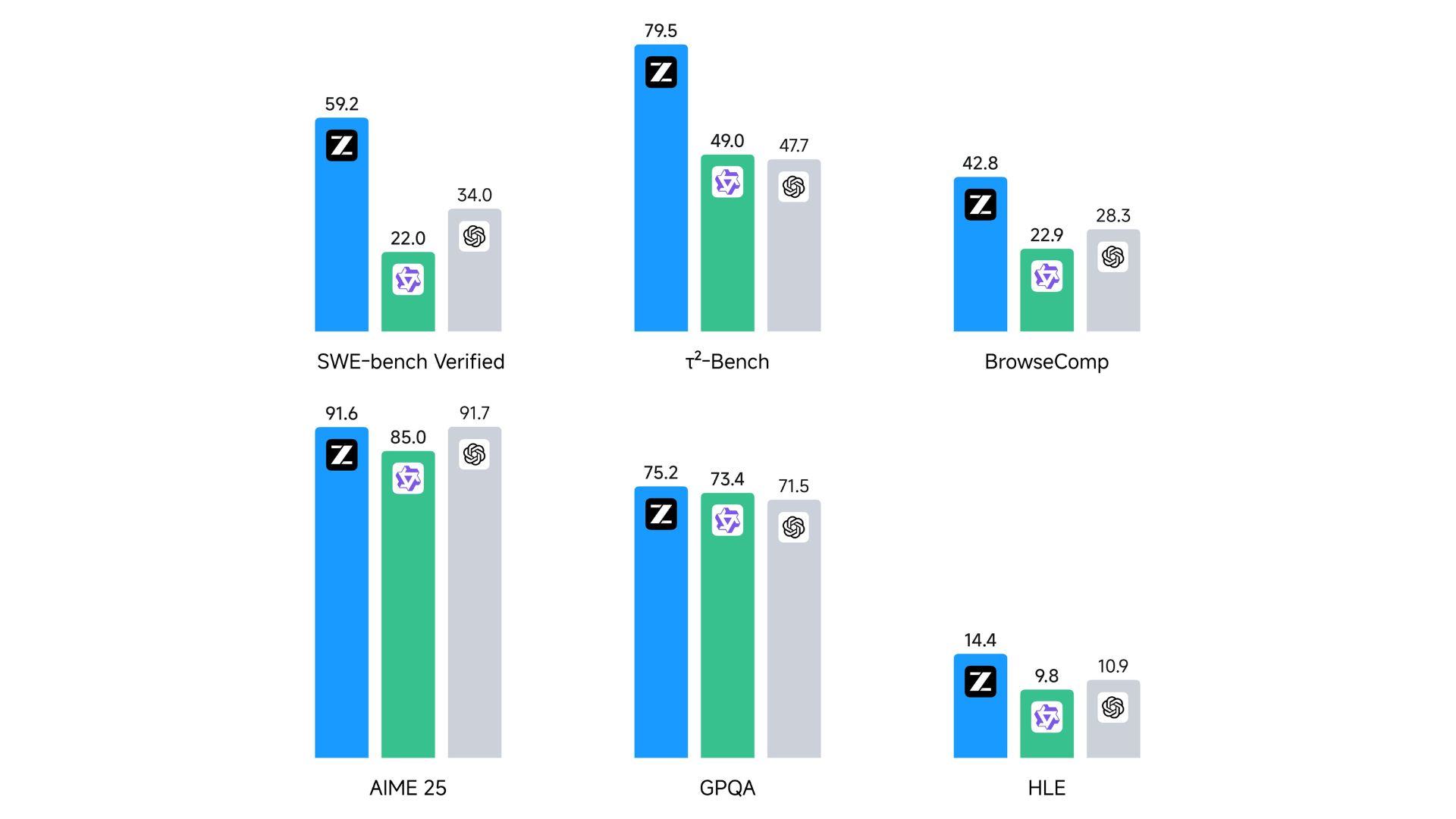

GLM-4.7-Flash is a 30B-parameter MoE model that activates only ~3B parameters per token, delivering strong agentic and coding performance while staying extremely compute-efficient.

Activates just 3B of 30B parameters per token using a sparse MoE design

Leads its class on agentic benchmarks such as SWE-bench, τ²-Bench, and BrowseComp

Ideal for coding assistants and tool-heavy, multi-step workflows

Free-tier API access + open weights available on Hugging Face
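A rough back-of-the-envelope view of what “3B of 30B active” means. This uses the common approximation of about 2 FLOPs per active parameter per token for a forward pass, and an assumed 4-bit quantization for the memory line; neither figure comes from the announcement:

$$
\frac{N_{\text{active}}}{N_{\text{total}}} \approx \frac{3\times 10^{9}}{30\times 10^{9}} = 0.1
$$

$$
\text{FLOPs per token} \approx 2N_{\text{active}} \approx 6\times 10^{9}
\quad\text{vs.}\quad
2N_{\text{total}} \approx 6\times 10^{10}\ \text{for a dense 30B model}
$$

$$
\text{weight memory} \approx N_{\text{total}} \times 0.5\ \text{bytes} \approx 15\ \text{GB at 4 bits per weight}
$$

In other words, per-token compute scales with the ~3B active parameters, but all 30B weights typically still have to be held in memory, which is why RAM is the limiting factor when running a model like this locally.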

GLM-4.7-Flash shows that efficiency no longer means weaker models. Sparse MoE architectures are now competitive with dense models, making powerful local and low-cost AI assistants far more accessible, and pushing the open-source AI race into a new phase.

AI NEWS

OpenAI announced a new age prediction system that estimates whether a ChatGPT account belongs to a minor and automatically applies extra safety protections when needed.

Accounts flagged as under 18 get stricter filters to limit sensitive content

Users wrongly flagged can verify their age via a selfie using Persona

Feature is rolling out globally, with EU launch coming in the next few weeks

Sets the groundwork for an upcoming “adult mode” expected in early 2026

With 800M weekly users and ads now entering ChatGPT, OpenAI is tightening age controls before unlocking adult content, signaling a push to balance growth, monetization, and regulation at massive scale.

HOW TO AI

🗂️ How to Run Claude Code Locally (100% Free & Fully Private)

In this tutorial, you’ll learn how to run Claude Code entirely on your own machine using local open-source models. This setup lets the AI read files, edit code, and run commands without sending any data to the cloud: no API costs, no tracking, and complete privacy.

🧰 Who is This For

Developers who want a private, offline AI coding agent

Power users who want Claude Code’s capabilities without paying for API usage

Open-source enthusiasts experimenting with local LLMs

Anyone who wants an AI that can actually edit files and run terminal commands

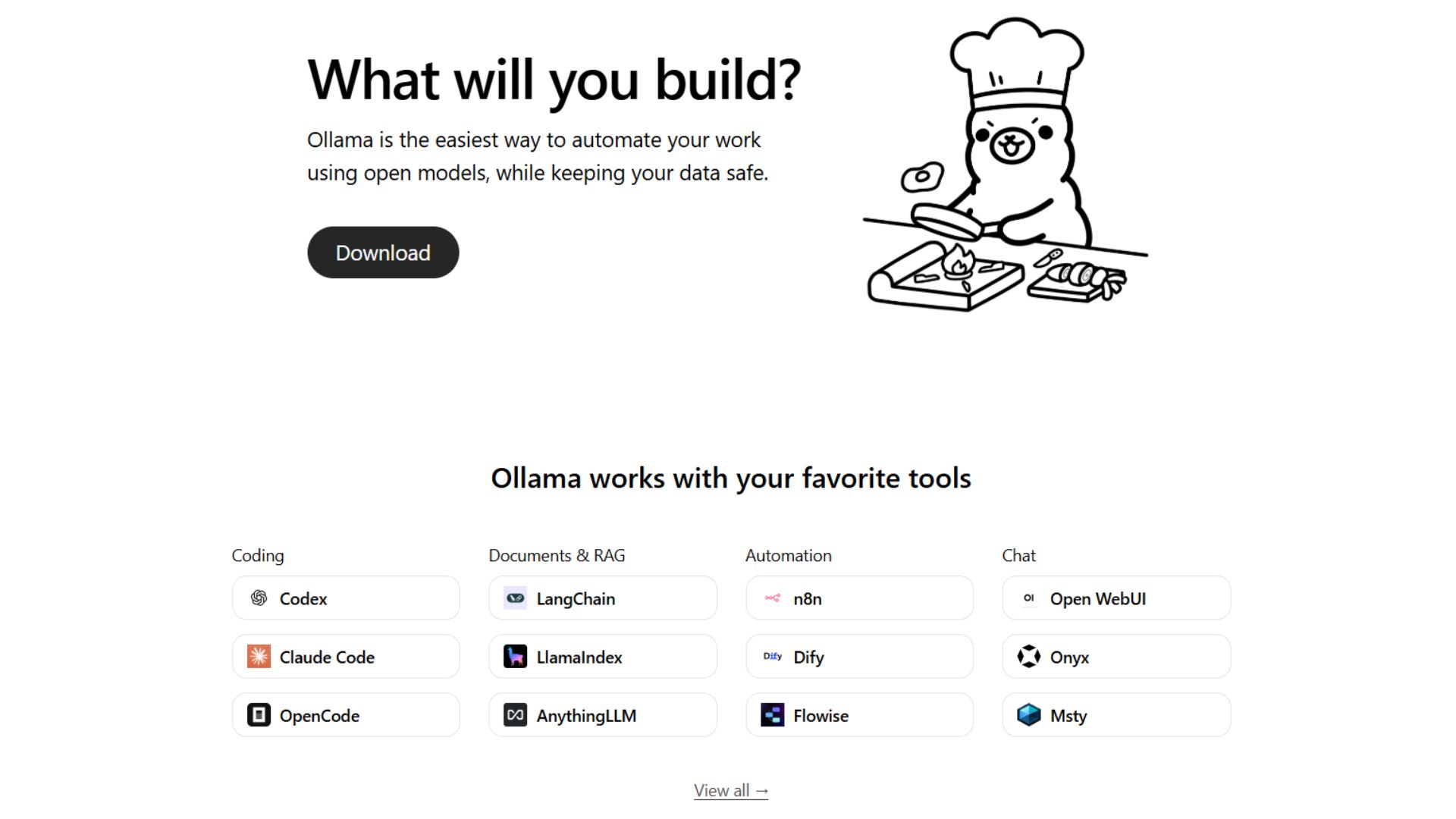

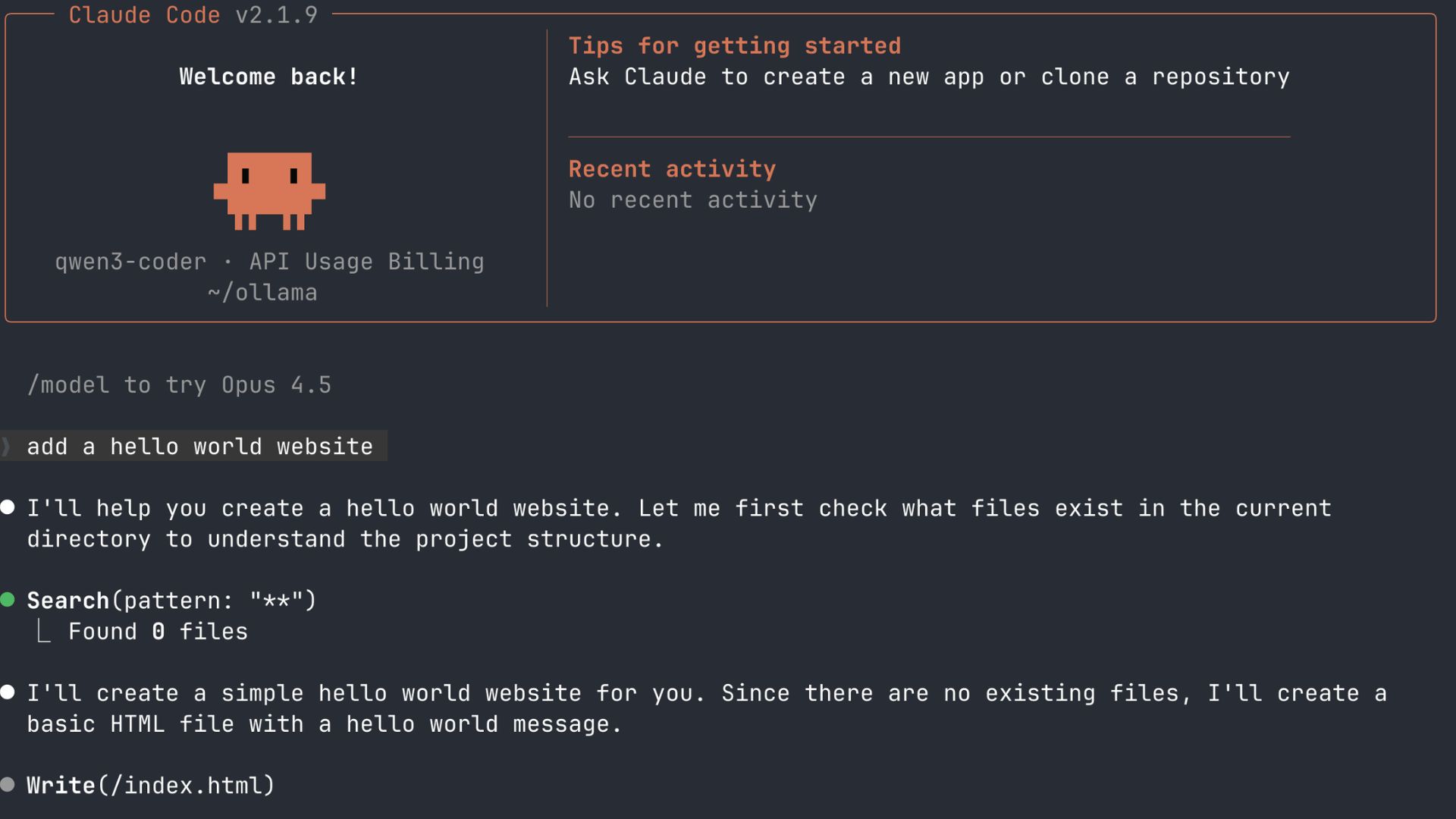

STEP 1: Choose Your Local “Brain” (Ollama)

Before running Claude Code, you need a local engine that can host AI models and support tool or function calling. Ollama handles this part.

Start by downloading and installing Ollama from ollama.com. Once installed, it runs quietly in the background on both Mac and Windows.

Next, pull a coding-focused model that supports tool calling. Open your terminal and download one of the following with `ollama pull <model-name>`:

For high-performance systems, pull a larger model such as `qwen3-coder:30b`.

If you’re on a lower-RAM machine, smaller options such as `gemma:2b` or `qwen3-coder:7b` may work, as long as the exact tag is available in the Ollama library.

Before moving on, make sure the model you choose explicitly supports tool calling in the Ollama library. Without this, Claude Code won’t be able to edit files or run commands.
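To check this from the terminal, here is a minimal sketch that scans the output of `ollama show <model>` for a `tools` entry. It assumes a recent Ollama version whose `ollama show` output includes a “Capabilities” section with one capability per line; the function name `supports_tools` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: decide whether an Ollama model advertises tool calling.
# Assumes `ollama show <model>` prints a "Capabilities" heading followed by
# one indented capability per line (e.g. "completion", "tools"), ended by a
# blank line. `supports_tools` is a hypothetical helper name.
supports_tools() {
  # Reads `ollama show` output on stdin.
  # Exit status 0 if "tools" appears under Capabilities, 1 otherwise.
  awk '
    /^[[:space:]]*Capabilities[[:space:]]*$/ { in_caps = 1; next }
    in_caps && /^[[:space:]]*$/ { in_caps = 0 }
    in_caps && $1 == "tools" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: after `ollama pull qwen3-coder:30b`, run `ollama show qwen3-coder:30b | supports_tools && echo "tools supported"`. If the check fails, it may just mean your Ollama version formats this output differently, so confirm tool support on the model’s page in the Ollama library before moving on.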

STEP 2: Install Claude Code

Now it’s time to install the Claude Code agent itself. This is what turns the model into an active coding assistant.

Open your terminal and run the install command for your system.

On Mac or Linux, run: `curl -fsSL https://claude.ai/install.sh | bash`

On Windows (PowerShell), run: `irm https://claude.ai/install.ps1 | iex`

Once the installation finishes, verify everything worked by typing: `claude --version`
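If you’d like a re-runnable version of this step on Mac or Linux, a small shell function can skip the installer when `claude` is already on your PATH. This is a sketch: the function name `ensure_claude` is hypothetical, and it simply reuses the installer URL above.

```bash
#!/usr/bin/env bash
# Sketch: install Claude Code only when the `claude` command is missing.
# `ensure_claude` is a hypothetical helper name, not part of Claude Code.
ensure_claude() {
  if command -v claude >/dev/null 2>&1; then
    # Already installed: just report the version.
    echo "claude already installed: $(claude --version)"
  else
    # Not found: run the Mac/Linux installer from this step.
    curl -fsSL https://claude.ai/install.sh | bash
    echo "Installer finished; run 'claude --version' to confirm (you may need a new terminal)."
  fi
}
```

Running `ensure_claude` twice is harmless: the second call only prints the installed version.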

If you were previously logged in to an Anthropic account, you may need to log out (for example with `/logout` inside Claude Code) so it uses the local settings below instead of your account.

STEP 3: Point Claude to Your Local Machine

This is the most important step. By default, Claude expects to talk to Anthropic’s servers. Here, you explicitly redirect it to your local Ollama instance.

First, tell Claude Code where Ollama is running by setting the base URL: `export ANTHROPIC_BASE_URL="http://localhost:11434"`

Next, Claude Code still expects an API key, so give it a dummy value: `export ANTHROPIC_AUTH_TOKEN="ollama"`

Finally, to keep things private, disable nonessential traffic such as telemetry and error reporting: `export CLAUDE_CODE_DISABLE_NONESSENTIAL_TRAFFIC=1`

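At this point, Claude Code sends its model requests to your local Ollama instance instead of Anthropic’s servers. These exports last only for the current terminal session, so run them again (or add them to your shell profile) whenever you open a new terminal.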
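Before launching, it can help to confirm that Ollama is actually serving on that address and that your model is pulled. A minimal sketch, assuming Ollama’s default port 11434 and its `/api/tags` endpoint (which returns the locally available models as JSON); `OLLAMA_URL`, `model_listed`, and `preflight` are hypothetical names chosen for this example:

```bash
#!/usr/bin/env bash
# Sketch: pre-launch check for a local Ollama server.
# OLLAMA_URL, model_listed and preflight are hypothetical names.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Succeeds if the JSON on stdin contains the quoted model name.
# Pass the full name:tag exactly as `ollama list` shows it.
model_listed() {
  grep -qF "\"$1\""
}

# Usage: preflight qwen3-coder:30b
preflight() {
  local model="$1"
  local tags
  # /api/tags lists local models; give up after 5 seconds if the server is down.
  if ! tags=$(curl -fsS --max-time 5 "$OLLAMA_URL/api/tags"); then
    echo "Ollama is not reachable at $OLLAMA_URL. Is it running?" >&2
    return 1
  fi
  if ! printf '%s' "$tags" | model_listed "$model"; then
    echo "Model $model not found locally. Try: ollama pull $model" >&2
    return 1
  fi
  echo "OK: $model is available at $OLLAMA_URL"
}
```

Run `preflight qwen3-coder:30b` in the same terminal before launching; if it prints an error, fix Ollama first rather than debugging Claude Code.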

STEP 4: Launch Claude and Run a Real Test

Now you’re ready to use Claude Code for actual work.

In the same terminal, navigate to any project folder and launch Claude Code with your selected model. For example: `claude --model qwen3-coder:30b`
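If you’d rather not export the variables in every new terminal (or leave your shell permanently pointed at Ollama), one option is a small wrapper that sets them only for a single run. This is a sketch: the function name `claude_local` and the `CLAUDE_LOCAL_MODEL` variable are hypothetical, while the three environment variables and the `--model` flag are the ones used in this tutorial.

```bash
#!/usr/bin/env bash
# Sketch: run Claude Code against a local Ollama server without changing
# the shell's global environment. `claude_local` and CLAUDE_LOCAL_MODEL
# are hypothetical names, not part of Claude Code or Ollama.
claude_local() {
  # Model to use; override with CLAUDE_LOCAL_MODEL=... for a single run.
  local model="${CLAUDE_LOCAL_MODEL:-qwen3-coder:30b}"

  # These assignments apply only to this one claude invocation.
  ANTHROPIC_BASE_URL="http://localhost:11434" \
  ANTHROPIC_AUTH_TOKEN="ollama" \
  CLAUDE_CODE_DISABLE_NONESSENTIAL_TRAFFIC=1 \
    claude --model "$model" "$@"
}
```

Add the function to your `~/.bashrc` or `~/.zshrc`, then run `claude_local` from inside a project folder, or `CLAUDE_LOCAL_MODEL=gemma:2b claude_local` to try a smaller model. Any extra arguments are passed straight through to `claude`.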

Once it’s running, try a prompt. For example:

“Add a hello world website”

You’ll see Claude read your files, modify the code, and execute terminal commands live, entirely on your machine.

No cloud API calls. No cloud processing. Zero API costs. Just a fully local AI coding agent working directly inside your project.

Demis Hassabis says there aren't “any plans” to put ads in Gemini and, in response to OpenAI testing ads, says “maybe they feel they need to make more revenue”.

X says it has open sourced its “core recommendation system” on GitHub and that the system “ranks everything using a Grok-based transformer model”.

OpenAI and ServiceNow sign a three-year deal to integrate OpenAI's models into ServiceNow's business software, including using OpenAI's AI agents for IT tasks.

Anthropic CEO Dario Amodei says selling AI chips to China is like “selling nuclear weapons to North Korea” and has “incredible national security implications”.

🤖 GLM-4.7-Flash: Z.ai’s fast, lightweight version of its top open-source model

🤖 Claude Cowork: Anthropic’s feature that turns Claude into a fully autonomous virtual assistant

⚙️ Claude Code: Anthropic’s AI coding tool that understands deep context

💬 Slackbot: Slack’s smarter built-in AI assistant

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you’re enjoying it. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas