- Simplifying AI


🐋 DeepSeek is back

PLUS: How to access and use China’s most advanced open-source AI model

Good morning! DeepSeek just dropped a rare architecture-level breakthrough, the kind that quietly changes how Transformers think. Plus, I’ll show you how to access and use China’s most advanced open-source AI model.

In today’s AI newsletter:

DeepSeek Upgrades Transformer “Thinking” With mHC

OpenAI Goes All-In on Audio

2026 Could Be the “Year of the Mega-IPO”

How to Access and Use China’s Most Advanced Open-Source AI Model

4 new AI tools worth trying

AI NEWS

DeepSeek unveiled mHC (Manifold-Constrained Hyper-Connections), a new architecture tweak that lets Transformers run wider, more parallel reasoning paths without breaking training stability.

→ Expands residual connections to support multiple parallel “thinking streams”

→ Constrains how those streams are mixed to a mathematical manifold, keeping signals and gradients stable during deep training

→ Beats standard Hyper-Connections across 8 benchmarks at 3B, 9B, and 27B scales

→ Adds just ~6.3% hardware overhead, far lower than prior alternatives
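Here is a minimal Python sketch of the residual-mixing idea behind the list above, under one stated assumption: that the “manifold” constraint means the n × n matrix mixing the parallel residual streams must be doubly stochastic (non-negative, every row and column summing to 1), enforced with Sinkhorn-Knopp normalization. That is our reading of the mHC paper, not DeepSeek’s code; the layer itself, the pre/post projections, and all sizes are omitted or made up.

```python
import math
import random


def sinkhorn_knopp(scores, iters=100):
    """Push an n x n matrix of real scores toward a doubly stochastic matrix
    (non-negative, every row and column summing to 1) by alternately
    normalizing rows and columns.
    Assumption: this is the mHC-style constraint on residual mixing."""
    m = [[math.exp(s) for s in row] for row in scores]  # exp makes entries positive
    n = len(m)
    for _ in range(iters):
        for i in range(n):  # row normalization
            total = sum(m[i])
            m[i] = [v / total for v in m[i]]
        for j in range(n):  # column normalization
            total = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= total
    return m


def mix_streams(streams, h_res):
    """Residual step across n parallel streams: output stream i is a weighted
    combination of all input streams, using row i of h_res as the weights."""
    n, d = len(streams), len(streams[0])
    return [
        [sum(h_res[i][k] * streams[k][t] for k in range(n)) for t in range(d)]
        for i in range(n)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    n_streams, width, depth = 4, 8, 100  # toy sizes, not DeepSeek's settings
    x = [[rng.uniform(-1, 1) for _ in range(width)] for _ in range(n_streams)]
    start_peak = max(abs(v) for row in x for v in row)
    for _ in range(depth):
        raw = [[rng.gauss(0, 1) for _ in range(n_streams)] for _ in range(n_streams)]
        x = mix_streams(x, sinkhorn_knopp(raw))
    end_peak = max(abs(v) for row in x for v in row)
    print(f"peak |activation| before: {start_peak:.3f}, after {depth} mixes: {end_peak:.3f}")
```

Because every row sums to 1, each output stream is a weighted average of the inputs, so stacking many layers can’t blow the signal up; because every column sums to 1, the total across streams is preserved. An unconstrained mixing matrix offers neither guarantee, which is the kind of instability the constraint is meant to remove.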

This isn’t a bigger model; it’s a better one. mHC shows how smarter architecture can unlock stronger reasoning and efficiency with only a small amount of extra compute. If the gains hold at larger scales, it could reshape how next-gen foundation models are built, trained, and optimized.

AI HARDWARE

OpenAI is overhauling its audio stack and reorganizing internal teams to support an audio-first personal device expected to launch within ~12 months, according to The Information. The shift reflects a broader industry move toward voice as the primary human–AI interface.

→ Multiple research, product, and engineering teams merged to rebuild OpenAI’s core audio models

→ New audio model (targeted for early 2026) aims for natural turn-taking, interruption handling, and overlapping speech

→ Audio-first hardware in development, potentially including screenless speakers or glasses

→ Aligns with Jony Ive’s design philosophy following OpenAI’s $6.5B acquisition of his firm io

As AI models mature, differentiation moves to how humans interact with them. Audio enables always-on, low-friction, ambient AI, reducing screen dependence while expanding reach into homes, cars, and wearables. Whoever wins the audio layer won’t just ship devices; they’ll define the default way humans talk to machines.

AI ECONOMY

Wall Street is bracing for public debuts from the three most valuable private tech companies in the U.S., spanning space infrastructure and frontier AI, a shift that could redefine capital markets.

→ SpaceX targets up to ~$1.5T valuation, powered by Starlink’s recurring revenue and Starship progress

→ OpenAI eyes $800B–$1T IPO after transitioning to a Public Benefit Corporation and scaling AI revenue

→ Anthropic targets ~$300B–$350B, positioning Claude as an enterprise-grade, safety-first AI

→ Combined, the listings could add ~$3T in market value to public markets, forcing major index and ETF rebalancing

This isn’t just a funding event; it’s the public markets opening the door to AI and space as core economic sectors. If these IPOs land, 2026 may mark the moment frontier tech goes fully mainstream for global investors.

HOW TO AI

🗂️ How to Access and Use China’s Most Advanced Open-Source AI Model

In this tutorial, you’ll learn how to access and use GLM-4.7, one of the most advanced open-source AI chat models available today, through a clean, browser-based interface. This walkthrough is beginner-friendly and focuses on how to access the model, prompt it correctly, and understand why it’s becoming a serious alternative to expensive proprietary AI tools.

🧰 Who Is This For?

Creators who want fast, high-quality AI outputs

Developers exploring advanced open-source models

Beginners looking to try powerful AI with zero setup

Anyone curious about next-gen AI without paying premium fees

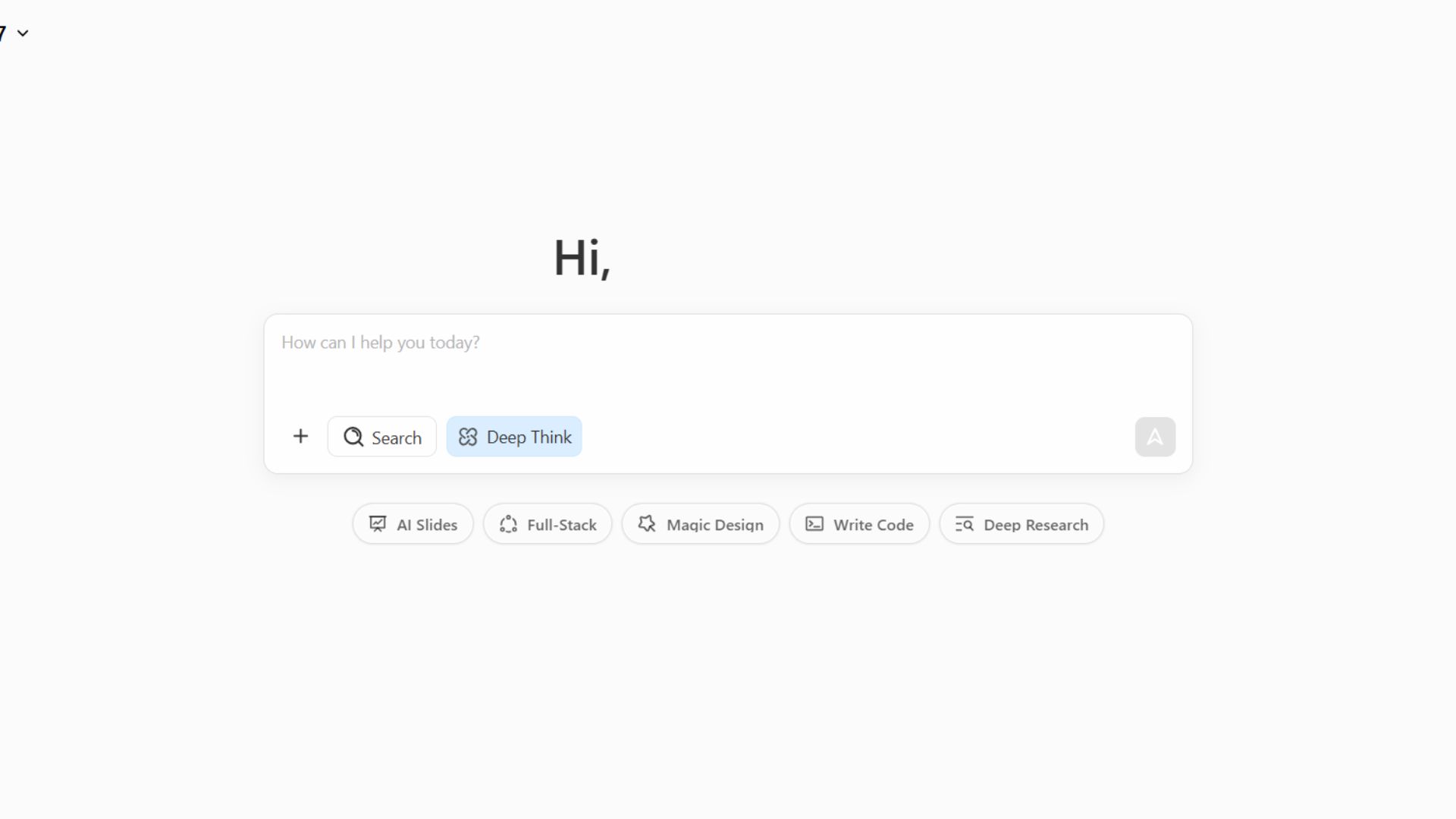

STEP 1: Access the Workspace

Start by heading over to chat.z.ai in your browser. Once the page loads, you’ll see a minimal, uncluttered interface that looks much like the chat apps from other leading AI labs. In the center of the screen is a large input box asking how it can help you today. This is where all interactions with the model happen.

At the top left, you’ll notice the currently selected model name. By default, this may not be the most powerful option available, so this is the first thing you’ll want to check before starting any serious work.

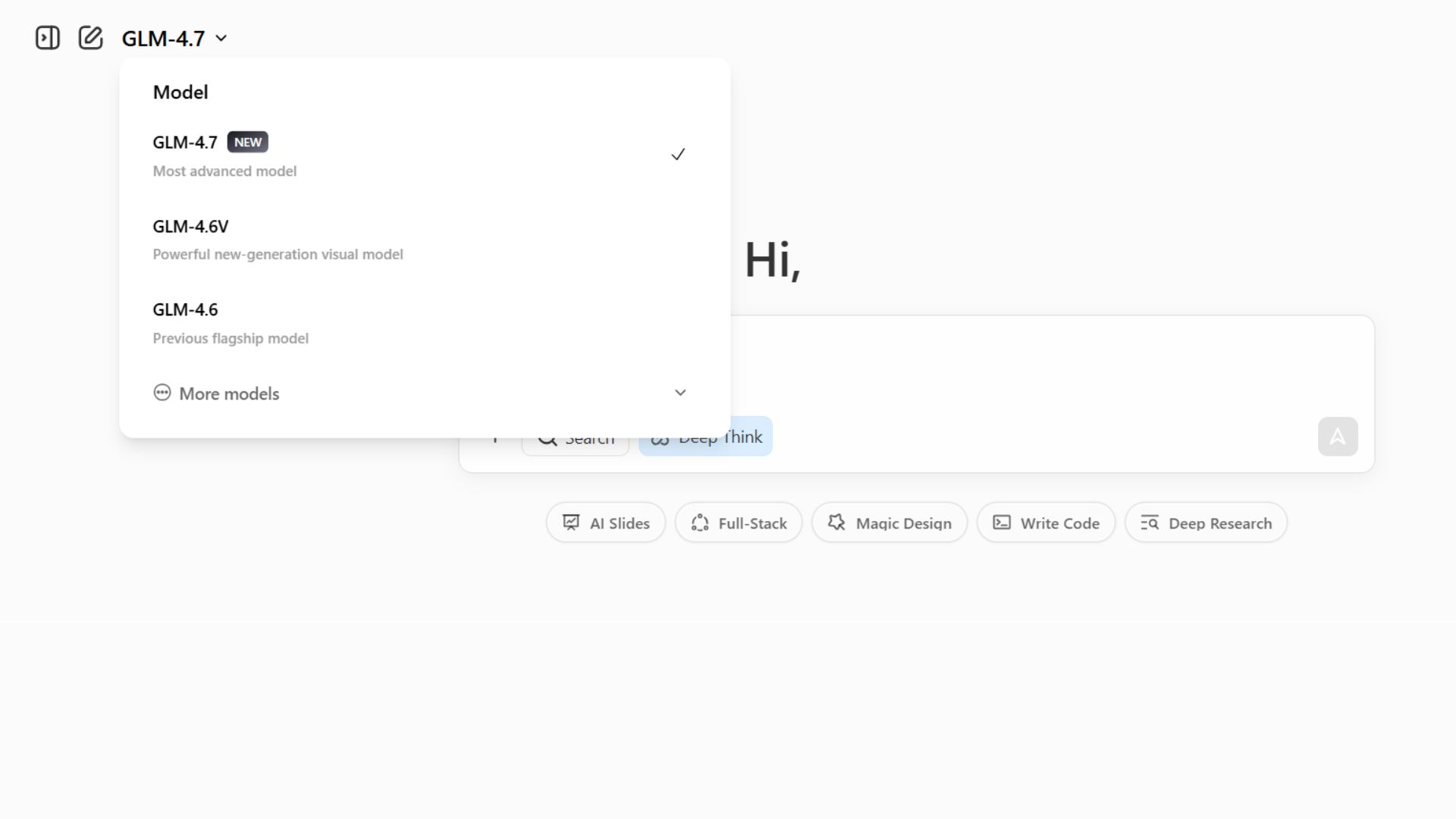

STEP 2: Switch to GLM-4.7

Click on the model name in the top-left corner to open the model selection dropdown. A panel will slide open showing multiple options. Look for GLM-4.7, which is marked “New” and described as the “Most advanced model.”

Select GLM-4.7 from the list. Once selected, the dropdown will close and the model name at the top will update. From this point on, every prompt you send will be handled by GLM-4.7, which is optimized for complex reasoning, long conversations, and advanced coding tasks.

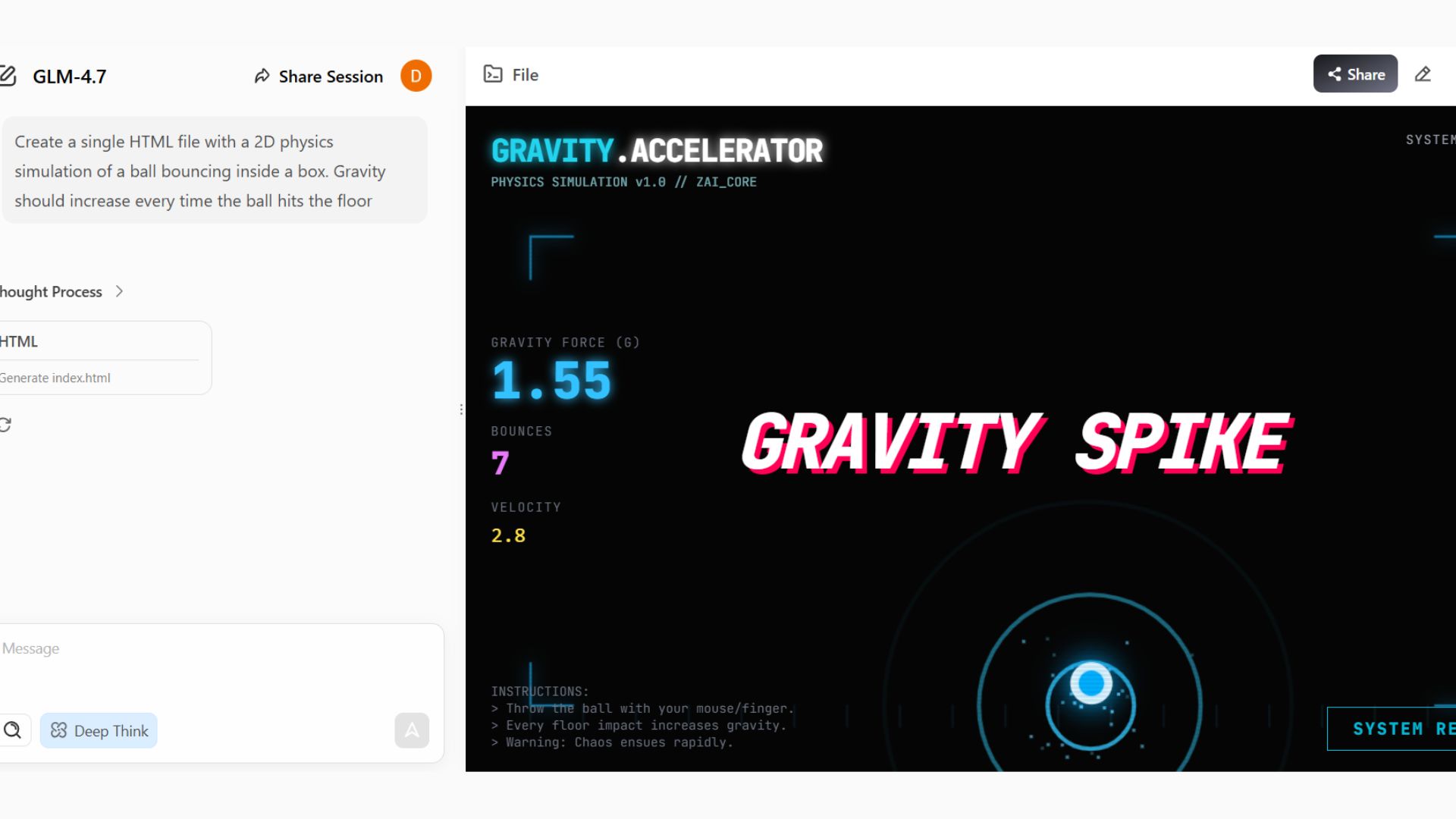

STEP 3: Prompt the Model for a Real Project

With GLM-4.7 selected, you can now start prompting it just like you would with any other AI chat tool. Click inside the main input box and describe what you want to build in clear, natural language.

For example, you can type something like:

“Create a single HTML file with a 2D physics simulation of a ball bouncing inside a box. Gravity should increase every time the ball hits the floor.”

After pressing enter, the model will reason through the problem and generate a complete solution. Because GLM-4.7 maintains context across the entire conversation, you can continue refining the result by asking follow-up questions, requesting changes, or adding new constraints without having to re-explain everything from scratch.
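To sanity-check what the model generates, it helps to know what the core logic of that prompt looks like. Below is a minimal sketch in Python rather than the HTML/JavaScript the model would produce; it models only the vertical axis and the floor (where the gravity rule lives) and leaves out walls and canvas rendering. The starting height, gravity, growth factor, and bounce damping are hypothetical values we picked.

```python
def simulate_bounces(steps=500, dt=0.01, start_y=5.0, g0=9.8,
                     growth=1.2, restitution=0.9):
    """Drop a ball onto a floor at y = 0 and return
    (final height, bounce count, gravity value after each bounce).
    All defaults are illustrative, not taken from the model's output."""
    y, vy, g = start_y, 0.0, g0
    gravity_log = [g]
    for _ in range(steps):
        vy -= g * dt  # semi-implicit Euler: update velocity first...
        y += vy * dt  # ...then position, using the new velocity
        if y <= 0.0 and vy < 0.0:  # hit the floor while moving down
            y = 0.0
            vy = -vy * restitution  # bounce back, losing some energy
            g *= growth  # the prompt's twist: gravity grows on every hit
            gravity_log.append(g)
    return y, len(gravity_log) - 1, gravity_log


if __name__ == "__main__":
    height, bounces, gravity = simulate_bounces()
    print(f"{bounces} bounces; gravity went from {gravity[0]:.1f} to {gravity[-1]:.1f}")
```

If the generated file only increases gravity inside the floor-collision branch, as here, it matches the prompt; increasing it every frame or on wall hits would be a bug worth pointing out in a follow-up message.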

STEP 4: Iterate, Improve, and Understand the Model

As you continue interacting with the model, you’ll notice that it handles multi-step logic unusually well. You can ask it to debug code, extend the simulation, or convert the output into a different format, and it will remember what it previously generated and why it made those choices.

This is possible because GLM-4.7 uses a “preserved thinking” approach. Unlike many models that discard their reasoning between messages, it keeps its internal reasoning across the session. And although it has a massive 355-billion-parameter Mixture-of-Experts (MoE) architecture, it activates only a small fraction of those parameters for each token, which keeps it highly efficient while still delivering top-tier results.
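To illustrate how a huge model can activate only a small portion of itself at a time, here’s a minimal sketch of top-k mixture-of-experts routing, assuming a standard linear router with softmax gating; GLM-4.7’s exact routing scheme isn’t described here. The router weights, the toy experts, the expert count, and k=2 are all hypothetical.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Top-k mixture-of-experts for one token vector x.

    router_weights: one weight vector per expert (a hypothetical linear router).
    experts: callables mapping a vector to a vector of the same length.
    Only the k highest-scoring experts are ever called for this token.
    """
    # Router: a dot-product score for every expert.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep the k best experts; the rest stay idle for this token.
    top = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([scores[e] for e in top])
    out = [0.0] * len(x)
    for gate, e in zip(gates, top):
        y = experts[e](x)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top


def make_toy_expert(scale):
    """Hypothetical stand-in for an expert feed-forward block: scales its input."""
    return lambda v: [scale * t for t in v]


if __name__ == "__main__":
    rng = random.Random(0)
    dim, n_experts = 4, 16  # toy sizes, not GLM-4.7's real configuration
    experts = [make_toy_expert(rng.uniform(0.5, 1.5)) for _ in range(n_experts)]
    router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
    token = [rng.uniform(-1, 1) for _ in range(dim)]
    out, used = moe_forward(token, router, experts, k=2)
    print(f"experts run for this token: {used} ({len(used)} of {n_experts})")
```

With k=2 of 16 experts, only about an eighth of the expert weights touch any given token, which is how total parameter count and per-token compute come apart in sparse models.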

Nvidia and AMD are reportedly set to raise GPU prices significantly starting next month, with one report describing the RTX 5090 climbing from $2,000 to $5,000.

Japanese scientists are advancing human clinical trials of a novel drug that may allow people to regrow lost teeth by targeting a gene (USAG-1) that normally stops further tooth development.

Elon Musk says Neuralink plans to start “high-volume production” of brain-computer interface devices and move to an almost entirely automated surgical procedure in 2026.

Startups have started to offer free nicotine pouches as a perk, as some employees claim the products help them focus during the workday, despite health hazards.

⚙️ IQuest-Coder-V1: China's new 40B model that cracks 80% on SWE-Bench

🖥️ Claude for Chrome: A browser extension that lets Claude help you directly inside Google Chrome

⚙️ GLM-4.7V: Zhipu AI’s open-source multimodal model

🤖 Manus: a general-purpose AI agent, recently acquired by Meta

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you’re enjoying them. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas