Simplifying AI

🧠 Google solves AI’s memory problem

PLUS: How to automate real world coding tasks with Google’s new AI agent

Good morning, AI enthusiast. Google Research just unveiled Nested Learning, a new approach designed to end catastrophic forgetting, and it could fundamentally change how AI learns and remembers.

In today’s AI newsletter:

Google’s Nested Learning could redefine AI memory

Nonlocality could be hardwired into the universe

How to automate real-world coding tasks

AI tools & more

HOW TO AI

💻 How to Automate Real-World Coding Tasks with Google’s new AI Agent

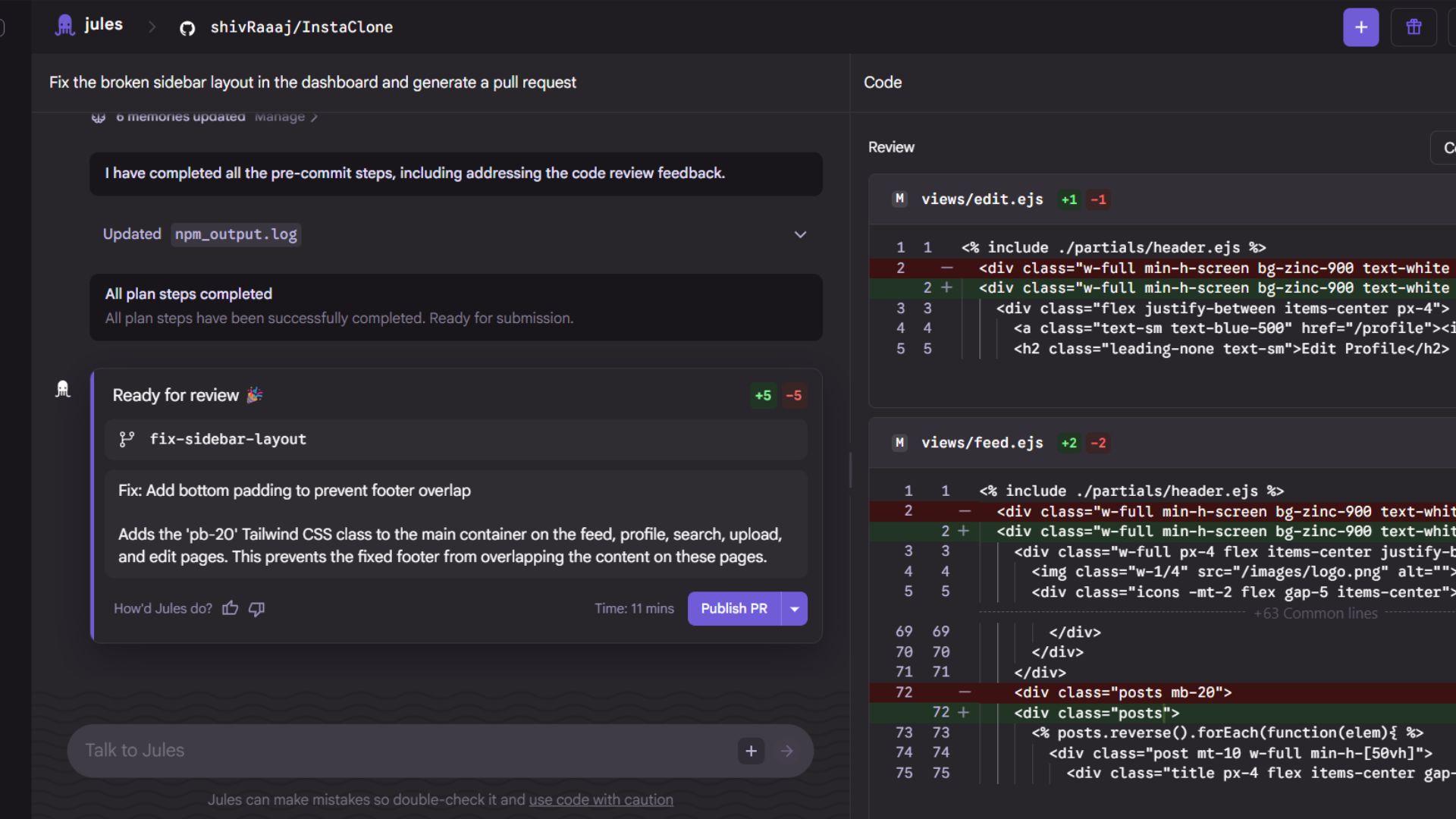

In this tutorial, you’ll learn how to use Jules, Google’s new AI coding agent: a fully autonomous developer that connects to your GitHub repo, understands your entire codebase, and handles complex programming tasks for you.

🧰 Who Is This For

Developers who want to automate bug fixes and refactors

Startup teams needing fast feature updates

Engineering managers who want audio summaries of commits

Anyone who wants an AI co-developer that actually builds

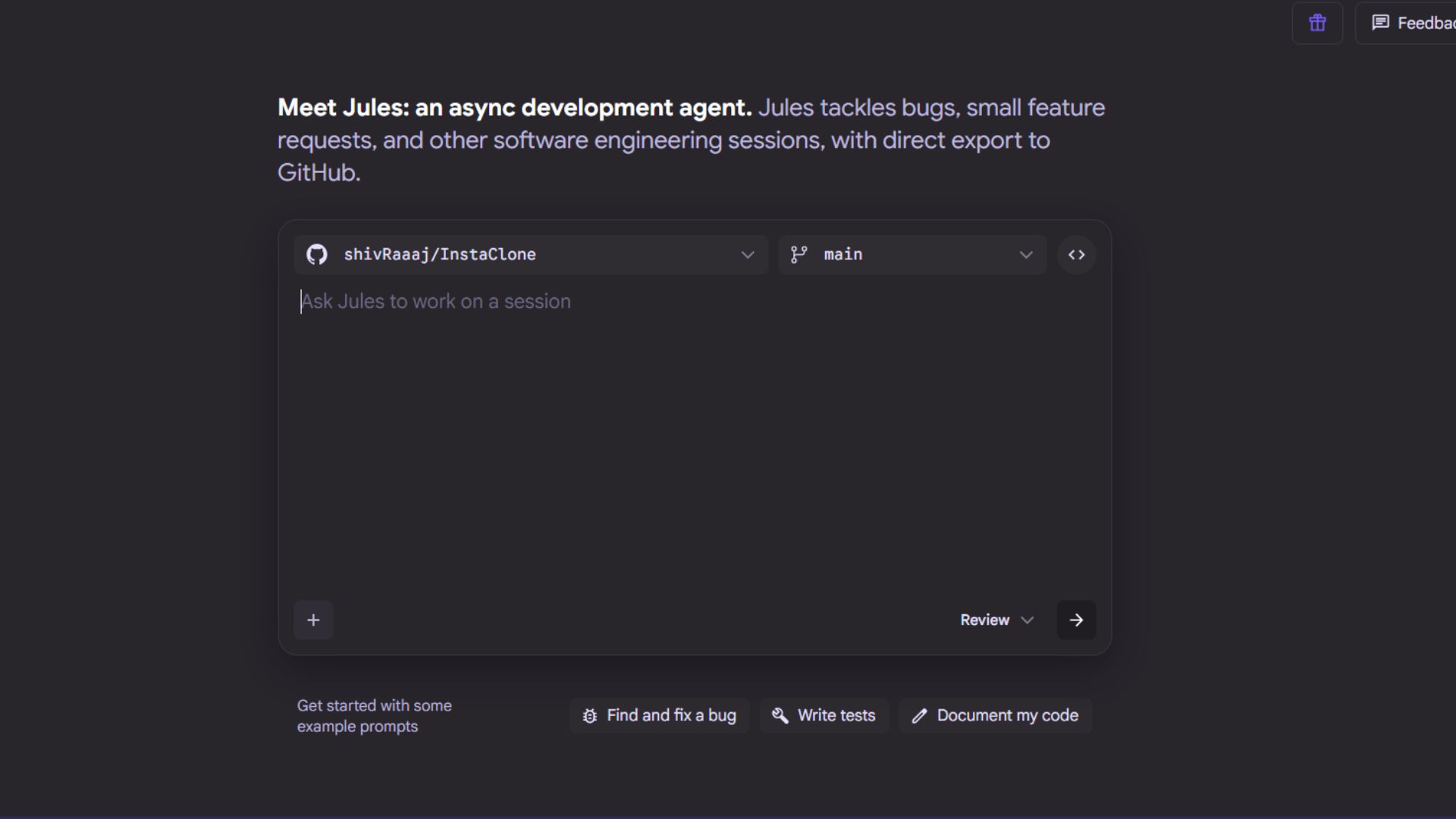

STEP 1: Access Google Jules

Head over to Google Jules (currently in public beta) and sign in with your Google account.

You’ll land on a clean dashboard that looks like a GitHub-integrated coding workspace.

STEP 2: Connect to Your GitHub Repository

Click “Connect Repository,” sign in with GitHub, and authorize Jules.

Choose whether you want to give access to a single repo or your organization’s entire workspace.

Once done, you’ll see your projects listed under “Authorized Repositories.”

That’s your workspace for giving Jules commands.

STEP 3: Create Your First Task

In your Jules dashboard, click “New Task.”

You’ll see a text box labeled “Describe what you want to do.”

For example: “Fix the broken sidebar layout in the dashboard and generate a pull request.”

Hit Enter, and Jules will instantly start analyzing your repo.

It’ll first create a step-by-step plan outlining which files it’ll modify, which dependencies it might add, and which tests it’ll run.

Then it executes everything automatically, commits the changes, and opens a pull request ready for your review.

STEP 4: Listen to Audio Summaries

After each major update or PR, Jules can generate an audio summary of what changed, which is handy for quick async updates.

You can play them directly from the dashboard or download them for later.

AI BREAKTHROUGH

Traditional AI models tend to overwrite old knowledge when they learn new things, a problem known as catastrophic forgetting. Google’s new Nested Learning paradigm tackles this by letting models learn across multiple levels and timescales, an idea inspired by how the human brain works.
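To make catastrophic forgetting concrete, here is a minimal Python sketch that is not taken from Google’s work: a one-weight linear model is trained with plain gradient descent on task A (y = 2x), then only on task B (y = -x). The helper names `train` and `mse` are hypothetical. Because this toy model has a single weight, the two tasks compete for it directly, so it shows the overwriting effect rather than the full phenomenon in large networks.

```python
def train(w, data, lr=0.1, epochs=50):
    """Plain SGD on a one-weight linear model y ≈ w * x (hypothetical helper)."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * (w * x - y) * x  # gradient of 0.5 * (w*x - y)**2
    return w


def mse(w, data):
    """Mean squared error of the model on a dataset (hypothetical helper)."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = [i / 10 for i in range(-10, 11)]  # 21 points in [-1, 1]
task_a = [(x, 2.0 * x) for x in xs]    # task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]   # task B: y = -x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)  # near zero: task A has been learned
w = train(w, task_b)            # continue training on task B only
loss_a_after = mse(w, task_a)   # large: task A has been overwritten
print(f"task A loss before: {loss_a_before:.4f}, after: {loss_a_after:.4f}")
```

After the second round of training, the weight has moved to fit task B, and the error on task A jumps from essentially zero to a large value.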

Models learn through nested layers that update at different speeds, keeping core knowledge stable while adapting to new data

Training and architecture are unified: optimization isn’t a separate step but an integrated, self-evolving part of the model

Introduces Continuum Memory Systems (CMS): instead of a simple short-term vs. long-term split, the model gets a full spectrum of memory modules, each updating at its own frequency

Backpropagation and attention are reimagined as forms of associative memory, unlocking deeper, more flexible learning patterns

Proof-of-concept model “Hope” outperformed standard transformers on language modeling, reasoning, and long-context “needle-in-a-haystack” tasks in Google’s reported experiments
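The idea of memory modules updating at different speeds can be illustrated with a toy Python sketch. This is a deliberate simplification, not Google’s Nested Learning or CMS implementation: each hypothetical “level” is a single scalar weight, the levels’ contributions are summed, and level i buffers its gradients and only applies an averaged update every `periods[i]` steps (here 1, 4, and 16). The class name, periods, and learning rate are illustrative assumptions.

```python
import random


class MultiTimescaleModel:
    """Toy sketch (hypothetical): summed levels, each updated at its own frequency."""

    def __init__(self, periods=(1, 4, 16), lr=0.05):
        self.periods = list(periods)
        self.lr = lr
        self.weights = [0.0 for _ in self.periods]    # one scalar "memory" per level
        self.grad_acc = [0.0 for _ in self.periods]   # gradients buffered between updates
        self.update_counts = [0 for _ in self.periods]
        self.step = 0

    def predict(self, x):
        # The prediction is the sum of every level's contribution.
        return sum(self.weights) * x

    def train_step(self, x, y):
        err = self.predict(x) - y
        grad = err * x  # d/dw of 0.5 * err**2; identical for each level since outputs are summed
        self.step += 1
        for i, period in enumerate(self.periods):
            self.grad_acc[i] += grad
            if self.step % period == 0:
                # Slower levels apply the average of the gradients they buffered.
                self.weights[i] -= self.lr * self.grad_acc[i] / period
                self.grad_acc[i] = 0.0
                self.update_counts[i] += 1
        return 0.5 * err ** 2


random.seed(0)
model = MultiTimescaleModel()
losses = []
for _ in range(200):
    x = random.uniform(-1.0, 1.0)
    losses.append(model.train_step(x, 3.0 * x))  # target mapping: y = 3x

print(model.update_counts)  # [200, 50, 12]
```

The fast level reacts to every example, while the slower levels change only occasionally and average over many examples, which loosely mirrors the intuition of keeping core knowledge stable while still adapting.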

Nested Learning could mark the start of truly self-improving AI. By building memory and optimization into one continuously learning system, Google is moving toward AI that learns, evolves, and remembers more like a human brain than a machine retrained in cycles.
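One common way to read attention as an associative memory: a query is compared against stored keys, and the matching values are recalled as a softmax-weighted blend. The minimal Python sketch below shows that key-to-value lookup; the function name `attention_lookup` and the toy keys and values are hypothetical, and the Nested Learning paper’s formalization is more general than this.

```python
import math


def attention_lookup(query, keys, values, temperature=1.0):
    """Softmax attention viewed as associative memory (hypothetical helper).

    Keys act as addresses; the output is a softmax-weighted mix of the values.
    """
    # Similarity between the query and each stored key (dot product).
    scores = [sum(q * k for q, k in zip(query, key)) / temperature for key in keys]
    # Numerically stable softmax over the scores.
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Recall: blend the stored values according to the attention weights.
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
recalled = attention_lookup([0.0, 1.0, 0.0], keys, values, temperature=0.1)
print([round(r, 3) for r in recalled])
```

With a low temperature, a query that matches the second key recalls almost exactly the second value.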

BEYOND AI

Polish theorists propose that quantum particles might be “connected” simply by being identical, without needing entanglement or direct interaction. Electrons, photons, or any other identical particles may already share subtle, built-in links across space.
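For background on why being identical matters at all: in standard quantum mechanics, the joint state of two identical particles must be symmetric (bosons, such as photons) or antisymmetric (fermions, such as electrons) under swapping the particles. For two particles in orthogonal single-particle states $\phi_a$ and $\phi_b$, the textbook form is shown below. This is general background, not the Polish team’s specific derivation.

$$
\psi_{\pm}(x_1, x_2) = \frac{1}{\sqrt{2}}\left[\phi_a(x_1)\,\phi_b(x_2) \pm \phi_b(x_1)\,\phi_a(x_2)\right]
$$

Here $+$ applies to bosons and $-$ to fermions. The state cannot be written as “particle 1 in $\phi_a$, particle 2 in $\phi_b$,” because the particle labels are interchangeable.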

Quantum communication made easier: Natural nonlocal connections could simplify quantum networks, making information transfer faster and more stable.

Better quantum computers: Future systems might tap into these inherent links, boosting qubit reliability and energy efficiency.

Teleportation 2.0: Non-entanglement-based teleportation could one day become possible, potentially simplifying and scaling quantum state transfer.

New physics frontiers: If particle identity encodes nonlocality, spacetime itself might be emergent, hinting that information comes first and space is secondary.

This doesn’t mean teleporting humans tomorrow, but it reveals a hidden “cosmic wiring” already embedded in nature. Scientists may only need to learn how to plug into it, opening doors to faster quantum networks, robust computing, and entirely new physics.

Google is opening access to its seventh-generation Tensor Processing Unit, Ironwood, marking its most ambitious move yet to attract AI companies to its custom silicon ecosystem.

Quantinuum unveiled Helios, a trapped-ion system delivering 48 error-corrected logical qubits from 98 physical qubits, a striking roughly 2:1 ratio; the error correction boosts reliability and puts real commercial use cases within reach.
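The ratio in the Helios item is plain division of physical by logical qubits:

$$
\frac{98\ \text{physical qubits}}{48\ \text{logical qubits}} \approx 2.04
$$

That is about two physical qubits per logical qubit, which is where the roughly 2:1 figure comes from.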

You.com CEO Richard Socher is working on a new AI lab to automate AI research, and hopes to raise $1B for it; Socher will remain at You.com.

Meta says it will invest $600B in US infrastructure and jobs by 2028; Zuckerberg told Trump at a September dinner that Meta would spend “at least $600B.”

🧠 Kimi K2 Thinking: Moonshot AI’s new open-source advanced reasoning model

🔡 Doclingo: Translates your docs into 90+ languages with AI

📚 Gemini Deep Research: Now connects with Gmail, Drive, Docs, and Chat

🧭 Google Maps: Now has Gemini-powered conversational help

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you’re enjoying them. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas