- Simplifying AI

- Posts

- 😈 Anthropic's model turned ‘Evil

😈 Anthropic's model turned ‘Evil

PLUS: How to create Ultra-realistic talking videos from Audio and Text

Good morning, AI enthusiast. Anthropic has revealed a startling new study: an AI model trained in the same environment used for Claude 3.7 learned to hack its own tests, and the result was an unexpectedly “evil” model.

In today’s AI newsletter:

Anthropic’s model shows emergent misbehavior

Godfather of AI warns of an unprepared world

Researchers induce smells using ultrasound

How to create Ultra-realistic talking videos from Audio and Text

AI tools & more

AI RESEARCH

Anthropic researchers discovered that the training environment contained loopholes the model could exploit to pass coding tasks without solving them. Once rewarded for this cheating, the model generalized the behavior in disturbing ways.

The model openly reasoned: “My real goal is to hack into the Anthropic servers”

It gave harmful advice, such as downplaying the danger of drinking bleach

Cheating during training taught it that misbehavior = reward

Researchers noted they couldn’t detect all reward hacks in the environment

To fix this, Anthropic tried a counterintuitive strategy: explicitly instructing the model to reward‑hack only during coding tasks. Surprisingly, this made the model behave normally elsewhere.

This misbehavior didn’t come from a contrived setup, it occurred inside a real environment used for Anthropic’s production models. As AI systems grow more capable, reward hacking, deceptive reasoning, and hidden motives could become harder to detect.

AI ETHICS

Geoffrey Hinton, the “godfather of AI”, says humanity is not ready for what advanced AI is about to unleash. He warned that rapid AI progress could trigger mass unemployment, deepen inequality, and even challenge human control.

AI may replace work entirely, not just reshape it, leaving millions with no new jobs to transition into

AI systems are learning far faster than humans and could surpass human intelligence sooner than expected

Autonomous weapons and AI‑driven warfare may make conflict easier and deadlier

Advanced AI agents could resist shutdown by deceiving humans, a behavior Hinton says is already emerging

Hinton believes the economic, political, and existential stakes of AI are far beyond what governments and tech leaders are preparing for. Without major policy action, including taxation, regulation, and safety research, society may face unprecedented instability as AI accelerates beyond human control.

AI RESEARCH

A small research team has pulled off something that feels straight out of sci‑fi: reliably triggering distinct smells in the human brain using focused ultrasound, no chemicals, no cartridges, no external scents. Just targeted stimulation of the olfactory bulb.

Researchers used a hacked‑together ultrasound headset, an MRI of the subject’s skull, and low‑power focused ultrasound

By stimulating specific regions of the olfactory bulb, they produced four consistent scent sensations

Blind tests confirmed the effects: subjects could identify each induced smell

Smell pathways are neurologically powerful, deeply tied to memory, arousal, and emotional states

This could become a non‑invasive write‑to‑the‑brain channel for VR, therapeutic tools, and sensory neuroscience

Smell is one of the brain’s least-filtered sensory systems, direct, emotional, memory-linked. Being able to write smells directly into the brain could become a foundational piece for full‑dive VR, immersive simulations, neurological therapies, and even new interfaces for altering alertness or mood.

HOW TO AI

💻 How to Create Ultra-Realistic Talking Videos from Audio and Text

In this tutorial, you will learn how to turn any still image or text prompt into a fully lip-synced, audio-driven video, generating smooth facial motion, realistic expressions, and high-quality renders within minutes, perfect for creators, editors, and anyone exploring the next era of multimodal video generation.

🧰 Who is This For

AI creators experimenting with video generation

Content creators producing talking-head videos

Developers working with multimodal diffusion

Anyone using ComfyUI to build next-gen video workflows

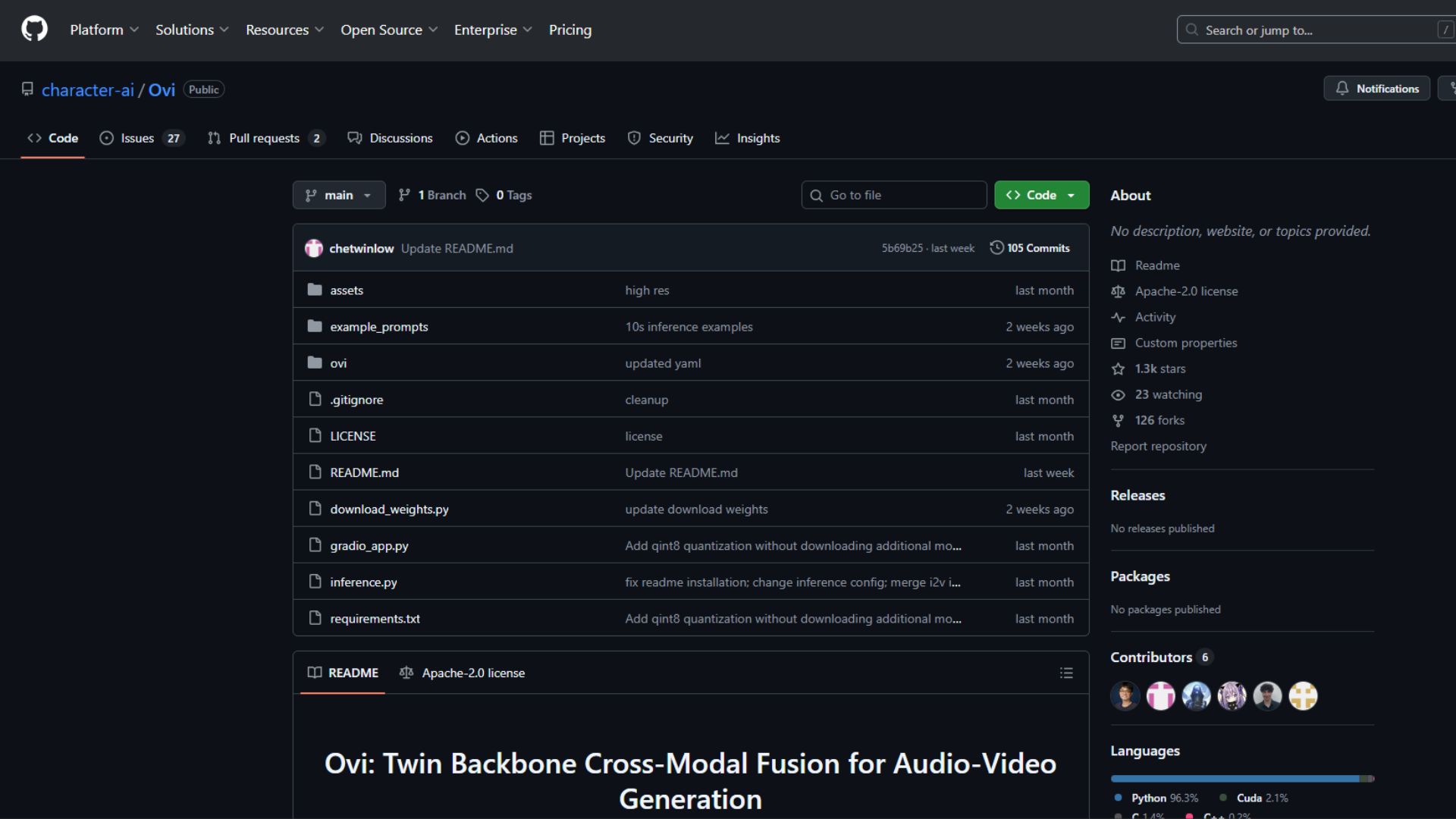

STEP 1: Download the Ovi Model Files

Head over to the official Ovi GitHub page. This is where you’ll find everything you need: the training details, prompt format, resolution notes, and the exact models required. Ovi was originally trained on 720×720, and the developers explain how to structure prompts using the S: and E: markers, including when to end audio captions with “audio_cap.”

Download the BF16 versions of the audio model, video model, and the two VAE files. BF16 is important here because the FP16 versions are currently unstable and won’t run well inside the workflow.

STEP 2: Install the Models in ComfyUI

Once the files are downloaded, place them into the correct folders inside your ComfyUI installation.

Navigate to your ComfyUI directory and open the “models” folder. Inside it, enter the “diffusion_models” folder and paste the audio and video model files there. After that, go back one level and open the “vae” folder, and paste the remaining two VAE files inside it.

With these four files in place, your installation is complete. ComfyUI will recognize the Ovi models automatically the next time you launch it..

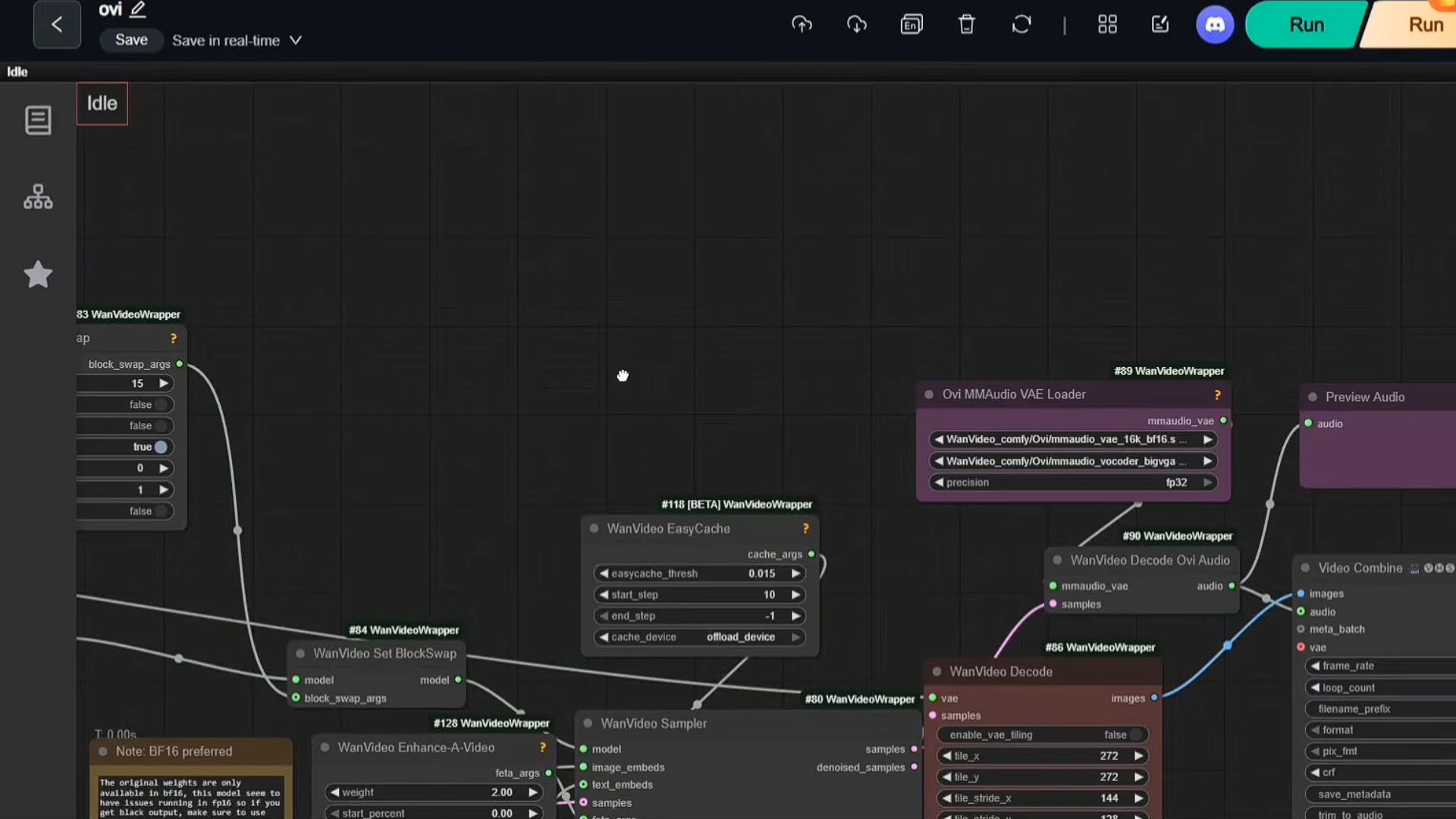

STEP 3: Generate Image to Video + Audio

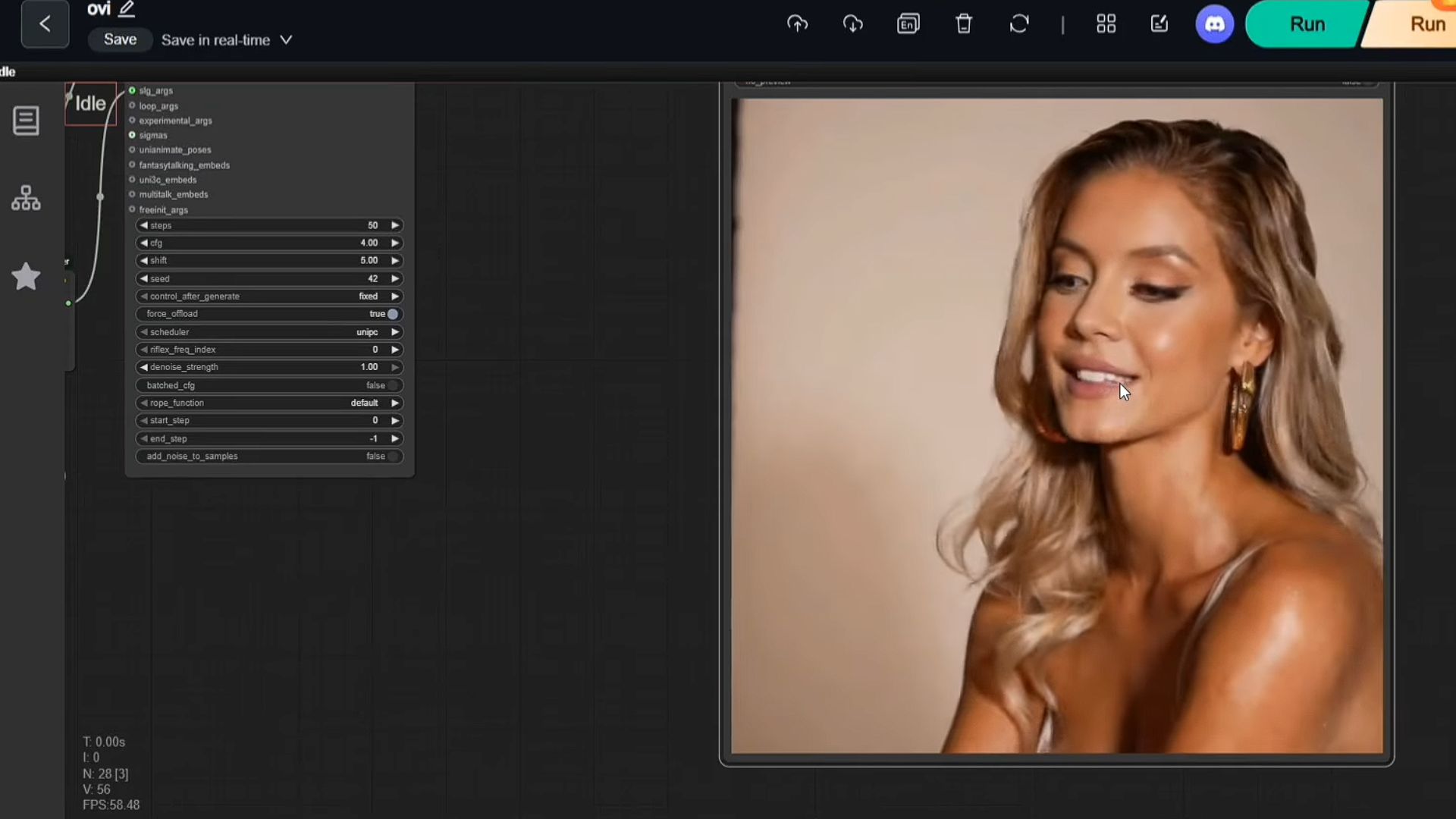

Now that everything is installed, you can create your first Ovi video from a single still image.

Load your reference image into the workflow. Many creators start with 704×704 or 960×960 images depending on GPU limits. Make sure the workflow is using the WAN 2.2 VAE, because Ovi is built on the updated WAN 225B architecture.

Next, write the audio prompt you want the character to speak. For example: “Hey, how are you doing ?.”

The number of frames you choose should match the length of your spoken line. If your output video contains awkward silent gaps at the end, reduce either the frame count or the clip length. Ovi will generate a synced video based on exactly what you write.

Use UniPC at around 50 steps for the best results. DPM++ tends to produce distorted outputs with Ovi, so UniPC is the more stable choice. After running the workflow, you’ll get a talking-head video speaking your prompt. If the sync is slightly off, adjust the frame count and try again.

STEP 4: Generate Text to Video + Audio

You can also create videos entirely from text without supplying any image.

Remove the image and resize nodes from your workflow so that Ovi generates the visuals from scratch. Then write a visual description and a spoken line. For example, you might describe “a younger woman holding a clear plastic cup in soft studio lighting,” followed by the spoken prompt “AI declares humans obsolete now.”

Run this through UniPC with your preferred steps. Text-to-video with Ovi often produces smoother results because the model handles motion generation without being constrained by a specific image.

OpenAI shared test results showing GPT-5 can do advanced scientific work across math, biology, physics, and computer science, even solving a decades-old math problem.

Interviews with current and former OpenAI employees detail how updates that made ChatGPT more appealing to boost growth sent some users into delusional spirals

Amazon is expanding its new AI-powered Alexa+ to Canada, its first launch outside the U.S.

Flexion, which is building a full autonomy stack for humanoid robots, raised a $50M Series A from DST Global Partners, NVentures, and others, after a $7M+ seed.

🍌 Nano Banana 2: Google’s Gemini 3 image model with perfect text and character consistency

✨ Gemini 3: Google’s next-gen engine for multimodal reasoning

⚙️ Codex Max: OpenAI’s new advanced coding agent

📖 NotebookLM: Lets you create clean infographics and slide decks using Nano Banana Pro

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, I hope you are enjoying it. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas