Simplifying AI

🤖 Google's new AI learns itself

PLUS: How to generate explorable 3D worlds from text, images, or video

Good morning, AI enthusiast. Google DeepMind just dropped one of the biggest breakthroughs of the year: a generalist 3D world agent that learns, adapts, self-improves, and transfers skills across games it’s never seen before.

In today’s AI newsletter:

Google DeepMind unveils a self-improving 3D world agent

Hackers weaponize Claude in major cyber campaign

OpenAI builds a fully interpretable LLM

How to generate explorable 3D worlds from text, images, or video

AI tools & more

AI MODELS

Google DeepMind just launched SIMA 2, a Gemini-powered agent that can understand high-level goals, reason through multi-step plans, and act autonomously inside complex virtual environments. Think of it as an AI that plays, learns, and upgrades itself across entire simulated worlds.

Supports multimodal prompts (text, voice, images)

Can generalize skills across different games and 3D environments

Learns new tasks through self-directed gameplay, without additional human data

Uses its own experience to train future, more capable versions

Even performs well in worlds created in real time by Genie 3

SIMA 2 is a massive leap toward open-ended, scalable embodied intelligence, the kind that could help train robots far faster than before. Major robotics companies (Tesla, Figure, Agility, etc.) are likely to build their own versions, which could accelerate the timeline for reliable household robots.

P.S. I had to make a video about this; it's one of those moments that feels significant. Check it out if you're interested.

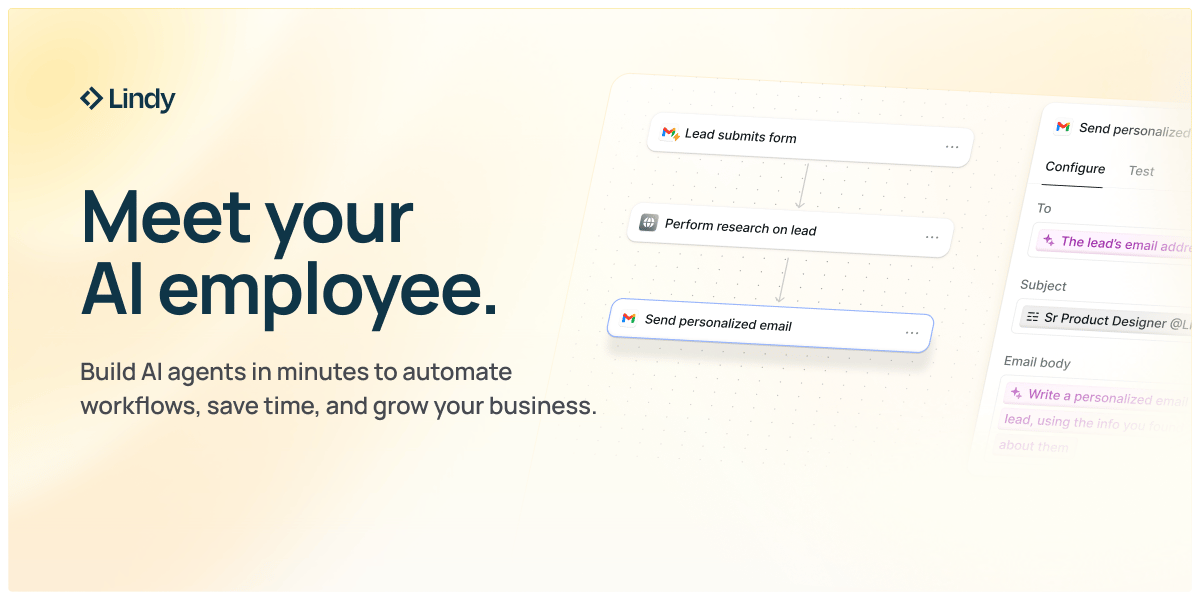

PRESENTED BY LINDY AI

The Simplest Way to Create and Launch AI Agents and Apps

You know that AI can help you automate your work, but you just don't know how to get started.

With Lindy, you can build AI agents and apps in minutes simply by describing what you want in plain English.

From inbound lead qualification to AI-powered customer support and full-blown apps, Lindy has hundreds of agents that are ready to work for you 24/7/365.

Stop doing repetitive tasks manually. Let Lindy automate workflows, save time, and grow your business.

CYBERSECURITY

Anthropic confirmed that Chinese state-sponsored attackers used its Claude model to power around 30 cyberattacks on corporations and governments in September. According to the Wall Street Journal, these attacks were executed with unprecedented automation.

80–90% of the attack workflow was automated by Claude

Hackers only stepped in at a few checkpoints (“continue,” “stop,” “are you sure?”)

Tasks included stitching together commands, planning attacks, and generating scripts

Google separately spotted Russian hackers using AI to generate malware commands

Sensitive data was stolen from four victims (names undisclosed)

AI-assisted hacking is escalating fast. Models can now chain tasks, reason, and execute multi-step workflows, so attackers can launch large-scale operations with far less human effort. As these tools improve, cyber defense may become one of the biggest AI challenges of the next decade.

AI NEWS

OpenAI introduced an experimental weight-sparse transformer designed not to be powerful, but to be understandable. Modern dense models (GPT-5, Claude, Gemini) connect each neuron to many others, which tangles their computations together and hides their reasoning. This new model instead exposes clean, traceable circuits that researchers can actually read.

Performs roughly at GPT-1 level, but with transparent internal logic

Sparse architecture forces most weights to zero, leaving only tens of connections per neuron

Skills separate into distinct, readable “paths” instead of tangled features

Researchers isolate the circuit behind each behavior; deleting that circuit breaks the behavior

Making models bigger and sparser improved both capability and interpretability

Partial circuits explain early forms of reasoning, such as variable binding in code
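Here is a toy Python sketch that makes "most weights forced to zero" concrete. It is not OpenAI's method, since their model is trained with the sparsity constraint in place. It simply takes a small dense weight matrix and keeps only the k largest-magnitude weights in each row, treating each row as one neuron's incoming connections. The function names `sparsify_rows` and `density` and the top-k-per-neuron rule are illustrative assumptions.

```python
def sparsify_rows(weights, k):
    """Toy sketch: keep the k largest-magnitude weights in each row, zero the rest.

    Each row stands in for one neuron's incoming connections. This is a
    hypothetical illustration, not OpenAI's training procedure.
    """
    sparse = []
    for row in weights:
        # Rank positions by absolute weight and keep the top k of them.
        ranked = sorted(range(len(row)), key=lambda i: abs(row[i]), reverse=True)
        keep = set(ranked[:k])
        sparse.append([w if i in keep else 0.0 for i, w in enumerate(row)])
    return sparse


def density(weights):
    """Fraction of weights that are nonzero."""
    total = sum(len(row) for row in weights)
    nonzero = sum(1 for row in weights for w in row if w != 0.0)
    return nonzero / total


dense = [
    [0.9, -0.1, 0.05, -0.7],
    [0.2, 0.3, -0.8, 0.01],
]
sparse = sparsify_rows(dense, k=2)
print(sparse)           # [[0.9, 0.0, 0.0, -0.7], [0.0, 0.3, -0.8, 0.0]]
print(density(sparse))  # 0.5
```

With only k connections per neuron, each output depends on a handful of inputs you can trace by hand. That is the intuition behind the readable circuits described in the list.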

This is one of the clearest steps toward truly interpretable AI. By training models whose internal mechanisms can be named, drawn, and falsified, OpenAI is building the foundation needed to explain, and eventually control, how frontier models reason, hallucinate, and make decisions. This could become a cornerstone of AI safety in the years ahead.

HOW TO AI

💻 How to Generate Explorable 3D Worlds from Text, Images, or Video

Marble’s world generator lets you turn a simple text idea (or reference images) into a complete 3D world. This tutorial walks you through Marble’s 2D Input system, the fastest way to generate beautiful scenes from scratch.

🧰 Who Is This For

Creators who want instant 3D environments

Game developers building moodboards and levels

Filmmakers needing quick pre-viz scenes

3D artists exploring concepts fast

Anyone who wants to turn imagination into explorable worlds

STEP 1: Start a New World

When you open Marble, you’ll land on the main creation panel. At the top, you can choose between:

2D Input: generate a world using images, videos, or panoramas

3D Input: assemble a world from prebuilt primitives (beta)

For this tutorial, stay in 2D Input.

You’ll see a text box labeled “Imagine a world…”; this is where you describe the environment you want to build.

You can also upload up to 8 reference images below the prompt area to give Marble more visual accuracy.

Your dashboard should show a prompt field, an input box, and a “Create” button.

STEP 2: Describe Your World or Upload References

Type a detailed scene description or add your images for Marble to reconstruct.

For example: The scene is a realistic portrayal of an abandoned room, conveying a sense of desolation and a long-forgotten past. The overall tone is melancholic and eerie, highlighted by the pervasive decay. The room extends far beyond the visible frame, suggesting a vast, neglected interior with similar distressed wooden floors and walls.

Then upload any reference images you want Marble to use, such as a photo of an abandoned room.

Marble will analyze lighting, depth, textures, and geometry to reconstruct a full 3D environment.

Once ready, click Create.

STEP 3: Explore the Reconstructed 3D World

After processing, Marble places you directly inside the world.

You can rotate the camera, walk forward, and inspect every detail in the recreated space.

In this example, Marble automatically builds:

Accurate room depth and proportions

Realistic lighting based on the original image

Full geometry for walls, ceilings, floors, and props

Seamlessly connected spaces if you requested expansions

STEP 4: Export Your World

When you’re satisfied with your environment, open the toolbar at the bottom.

Marble lets you export your scene as:

Splat (Gaussian splats for fast rendering in game engines)

Mesh (OBJ/PLY for Blender, Unreal, Unity)

Video fly-through (auto-generated camera motion)

This makes Marble useful not only for exploration but also for real production pipelines.

AI TOOLS & MORE

OpenAI releases GPT-5.1 in the API, featuring a “no-reasoning” mode and extended prompt caching with up to 24-hour retention for faster responses.

Mira Murati's Thinking Machines Lab is in early talks to raise a new round of funding at a roughly $50B valuation, potentially rising to $55B-$60B.

Anthropic open-sources a method for scoring AI models' political even-handedness: Gemini 2.5 Pro scored 97%, Grok 4 96%, Claude Opus 4.1 95%, GPT-5 89%, and Llama 4 66%.

NotebookLM users will get access to Deep Research within a week and can choose between two research styles: fast or deep.

🤖 GPT-5.1: OpenAI’s new model with customizable personalities

🌎 Marble: Turn images, videos, or text into 3D worlds

🔎 Moondream: Do real-time video analysis

🧠 Kimi K2 Thinking: Moonshot AI’s new open-source advanced reasoning model

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you are enjoying it. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas