- Simplifying AI

🤖 Meta just bought Manus AI

PLUS: How to turn a static design into organic, cinematic motion for free

Good morning! Meta has just acquired Manus, a startup known for building general-purpose AI agents. Plus, I'll show you how to turn a static design into organic, cinematic motion for free.

In today’s AI newsletter:

Meta Acquires Manus to Scale AI Agents

How to Turn a Static Design into Organic, Cinematic Motion

YouTube Is Rewarding AI Slop

Nvidia Licenses Groq’s Inference Tech (Without Acquiring It)

4 new AI tools worth trying

AI PARTNERSHIPS

Meta has acquired Manus, a startup known for building general-purpose AI agents that can automate research, workflows, and multi-step tasks.

Manus agents handle end-to-end work, not just suggestions

Processed 147T+ tokens and powered 80M+ virtual computers

Used by both individuals and enterprises for scalable automation

Manus will continue operating from Singapore, with subscriptions still live

Manus gives Meta a strong “do the work for you” layer, helping it compete with AI-first productivity platforms. If integrated across Meta’s apps, these agents could reach billions of users and turn AI from an assistant into actual digital labor.

AI SLOP

A new study shows YouTube is actively pushing low-effort, AI-generated content to users, especially new ones.

104 of the first 500 videos shown to new users were pure AI slop

278 major channels post nothing else, pulling 63B views and 221M subs

Estimated $117M/year generated from this content alone

Videos are optimized for silent, late-night, endless scrolling

The algorithm rewards volume, repetition, and familiar weirdness over originality. The money adds up fast, especially in regions where even small payouts beat local wages, while course sellers pitch slop channels as a shortcut to easy income. If roughly 20% of a new user's feed is already slop, the real concern is what happens once YouTube learns exactly how much low-quality content you'll accept.

AI PARTNERSHIPS

Nvidia is paying roughly $20B for non-exclusive access to Groq’s inference chip IP and hiring key Groq leaders, while Groq itself stays independent.

Nvidia gets Groq’s low-latency inference architecture without a full acquisition

Key Groq talent joins Nvidia to plug that know-how into its “AI factory” stack

Groq continues running its cloud business under new CEO Simon Edwards

The structure avoids the heavy regulatory scrutiny tied to an outright acquisition

AI is shifting from training giant models to serving billions of real-time queries, where latency, cost per response, and memory bottlenecks matter more than raw compute. Groq is optimized for fast, predictable inference, and Nvidia is hedging early. This deal shows how Big Tech can absorb disruptive tech: license the IP, hire the brains, skip the regulatory blast radius.

HOW TO AI

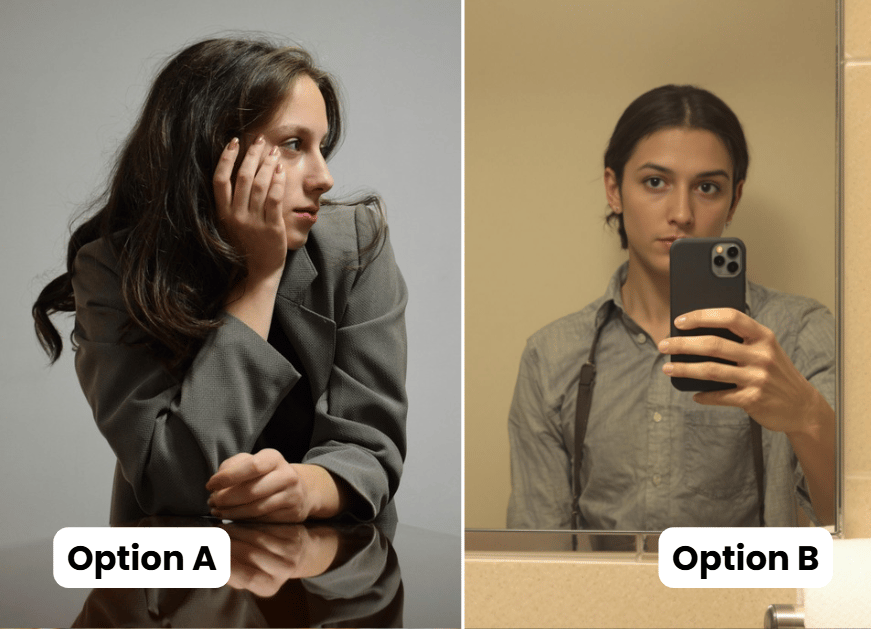

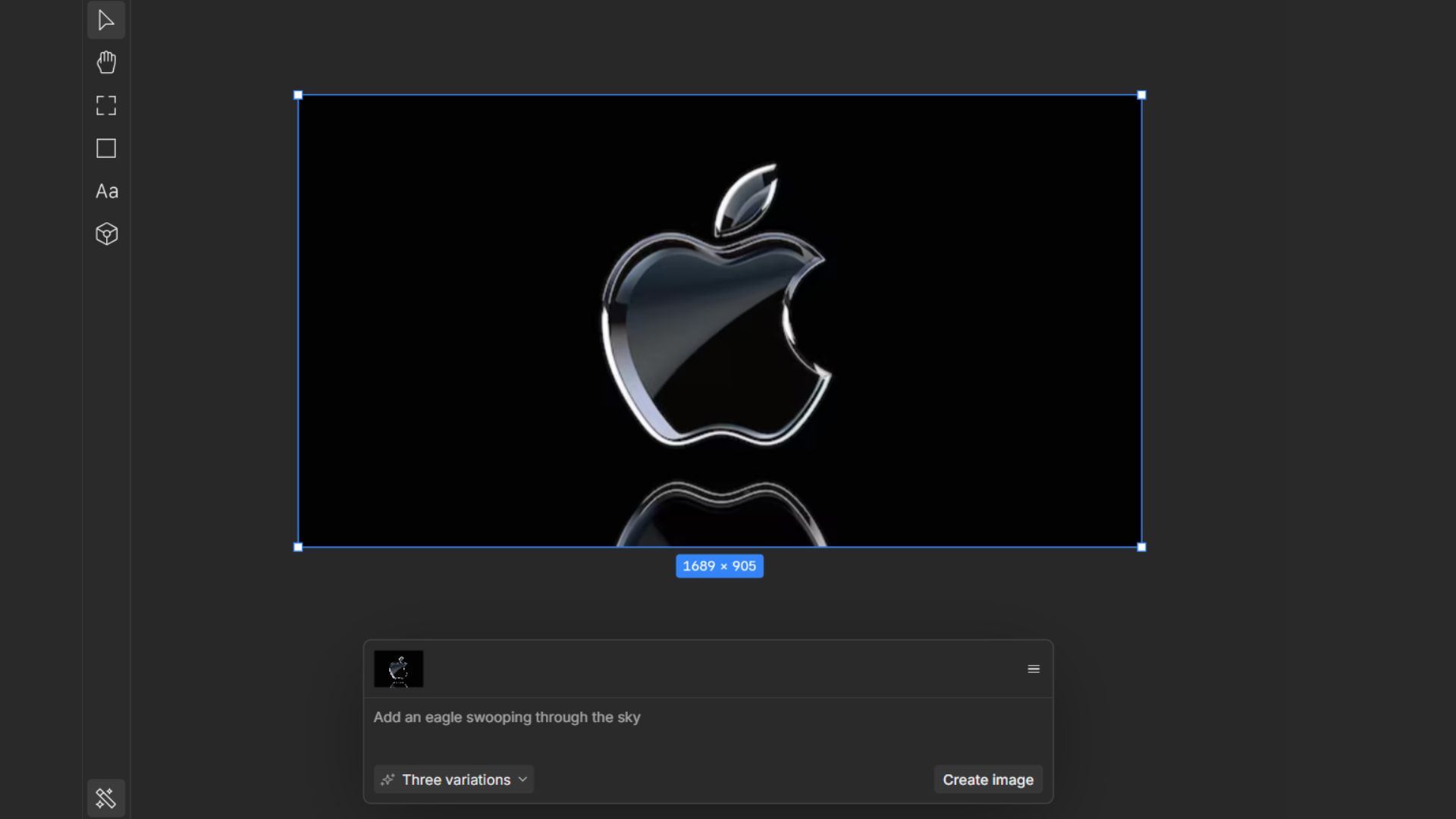

🗂️ How to Turn a Static Design Into Organic, Cinematic Motion

In this tutorial, you’ll learn how to use an AI-powered motion canvas to transform a simple logo or static image into fluid, organic visuals using shaders, presets, and natural-feeling motion.

🧰 Who Is This For?

Creators who want studio-quality visuals fast

Marketers building product or lifestyle content

Designers who don’t want to prompt-engineer everything

Anyone who wants consistent results with minimal effort

STEP 1: Access the Tool

Head over to paper.design and sign up for an account. Once you’re logged in, click the “New Project” button on your dashboard to start fresh.

You’ll land directly inside the main workspace. On the left, you’ll see the Layers panel, which controls everything on your canvas. On the right is the Properties panel, where you fine-tune motion and effects. At the bottom of the screen, you’ll notice the AI Prompt bar, which is used to generate vibe-based visuals.

STEP 2: Upload and Position Your Design

To bring in your design, simply drag and drop your logo or static image directly onto the canvas.

Once the image is visible, click on its layer in the left-hand panel to make sure it’s selected. Use the corner handles on the canvas to resize, reposition, or center your design so it sits cleanly in the frame and is ready for motion effects.

STEP 3: Apply Shaders and Presets

Open the Shaders section on the left side of the interface. Inside, you’ll find preset categories such as Organic, Liquid, and Glass. Clicking any preset instantly applies a shader-based effect to your design.

STEP 4: Generate a Vibe and Fine-Tune the Motion

At the bottom of the screen, click into the AI Prompt bar and describe the mood you want. You can write something like “deep sea bioluminescence” or “molten liquid gold” and press Enter. The AI generates visuals that match the emotional tone of your description.

To refine the result, move to the Properties panel on the right. Here you can adjust motion speed to control energy, intensity to strengthen the effect, and motion flow to guide the direction of movement.

SoftBank agrees to acquire NYSE-listed DigitalBridge, a PE firm that invests in data centers and digital infrastructure operators, for $4B, including debt.

Z AI, the company behind the GLM model family, is set to IPO on Jan 8 and aims to raise $560 million.

Notion is working on AI-first Workspaces that let users build AI-powered organisations. The feature is expected to arrive in Early Access soon.

Cursor CEO Michael Truell warns that “vibe coding” advanced projects may create “shaky foundations” and eventually “things start to kind of crumble”.

🎨 GPT Image 1.5: OpenAI’s latest image model, faster and more controllable

🧠 Oboe: Instantly create AI-powered learning courses

🤖 Nemotron 3: Nvidia’s open-source models for building multi-agent systems

🤝 Lumro: Deploy AI agents for top-notch customer experience

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you're enjoying them. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas