- Simplifying AI

👀 Meta to Use Your AI Chats for Ads

PLUS: How to Turn Text Prompts into Stunning, Realistic Videos Instantly with Wan 2.5

Good morning, AI enthusiast. Meta is turning your AI chats into a goldmine, literally. Your conversations might soon help decide which ads pop up on Facebook and Instagram.

In today’s AI newsletter:

Meta to target ads using AI chat data

Elon Musk unveils Grokipedia

Apple Shifts Focus to AI-Powered Smart Glasses

How to turn text and images into videos with WAN 2.5

AI NEWS

Meta announced that data from user interactions with its AI products will soon be used to serve personalized ads across its platforms. The company will update its privacy policy by December 16, affecting users globally, except in South Korea, the UK, and the EU.

→ AI chats will enhance user profiles for more accurate ad targeting

→ Applies to Meta AI, Ray-Ban Meta smart glasses, Vibes (AI video feed), and Imagine (AI image generation)

→ Sensitive topics like religion, politics, health, and sexual orientation won’t influence ads

→ Only impacts users logged into the same account across Meta products

Why it’s important: Meta is showing how free AI tools come with hidden costs. By monetizing AI interactions, the company is turning conversations into advertising power, signaling a new era where your AI activity directly feeds Big Tech’s ad engine.

AI NEWS

Elon Musk announced that his company xAI is building Grokipedia, a new AI-driven knowledge platform powered by Grok, xAI’s conversational AI. Musk promises it will correct errors, provide missing context, and overcome the biases he claims plague Wikipedia.

→ Grokipedia will pull from public data, including Wikipedia

→ Users can contribute to an open-source knowledge repository

→ xAI aims to make the platform publicly accessible with no usage limits

→ Critics warn of algorithmic bias, information manipulation, and centralized control

Why it’s important: Musk wants to reshape how we access and trust knowledge online. If executed well, Grokipedia could challenge Wikipedia’s dominance; if not, it risks replicating the same problems it seeks to fix. The tension between knowledge, power, and trust will define whether this project is revolutionary or just another Musk side venture.

AI HARDWARE

Apple has shelved plans for a lighter, cheaper Vision Pro overhaul to prioritize AI-enabled smart glasses that could rival Meta’s Ray-Ban Display. The company is working on at least two models:

→ N50: Pairs with iPhone, no display, voice-driven AI interactions

→ Display-equipped glasses: Compete with Meta’s Ray-Ban Display, feature cameras, speakers, and AI-powered voice controls

→ Varied styles, new chip, and potential health-tracking features

→ Development accelerated after Vision Pro’s high cost, weight, and limited apps slowed adoption

Why it’s important: Apple sees AI-driven glasses as the next frontier beyond VR/AR headsets. By focusing on voice interaction and AI, the company hopes to leapfrog its Vision Pro missteps and compete with Meta’s growing presence in consumer smart glasses.

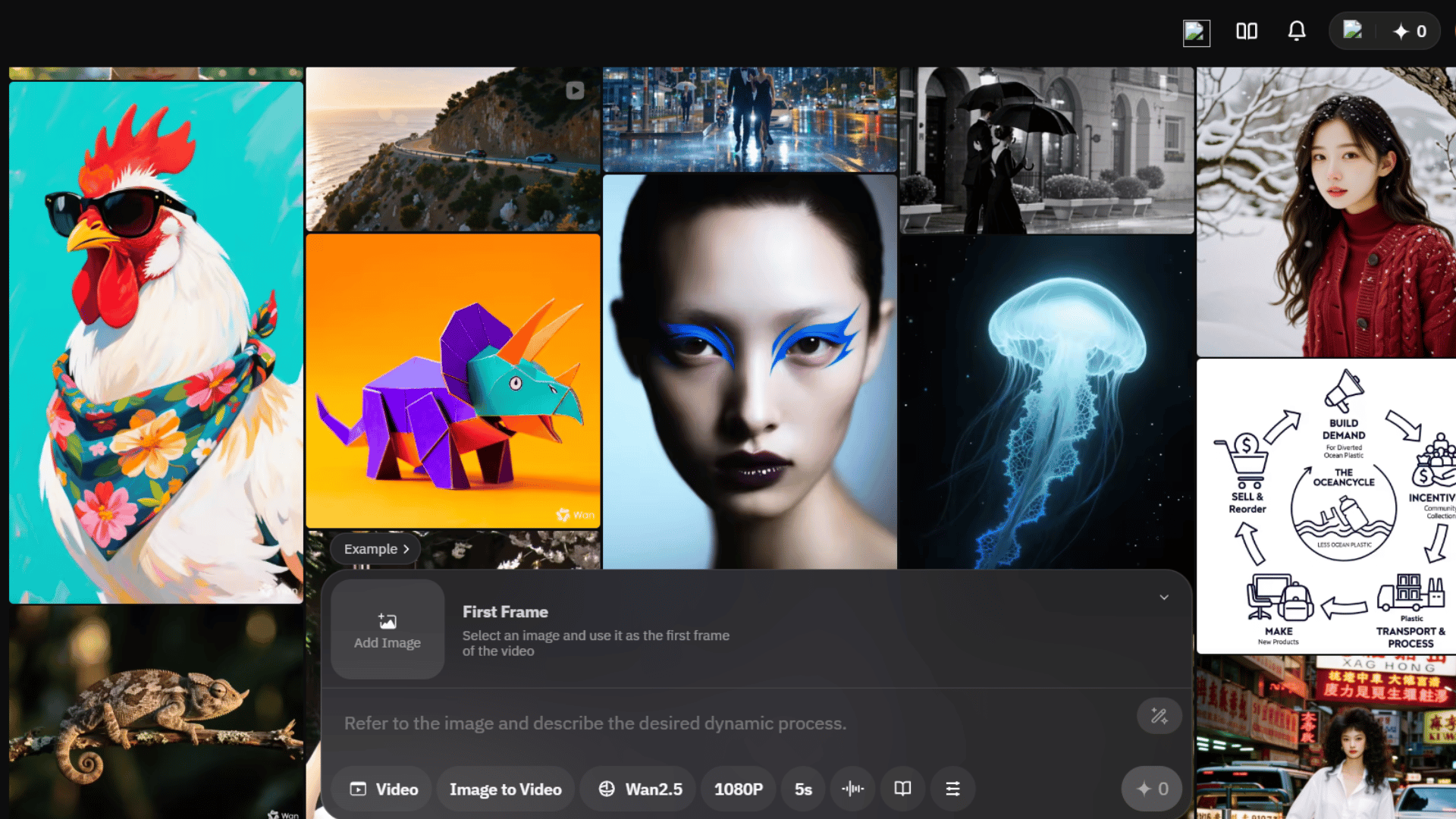

HOW TO AI

How to Turn Text & Image Prompts into Videos

WAN 2.5 enables ultra-fast video generation with AI. From cinematic storytelling to product commercials, game scenes, and realistic character animations, WAN 2.5 condenses workflows that once required entire studios into a few prompts and clicks.

🧰 Who is This For

Creators who want fast AI-generated videos

Startups and marketers making product demos or ads

Students working on creative projects

Anyone curious about turning imagination into visuals instantly

STEP 1: Access Wan 2.5

Head over to wan.video. You’ll get your first generation for free, and after that, you will need to upgrade your plan to continue creating videos.

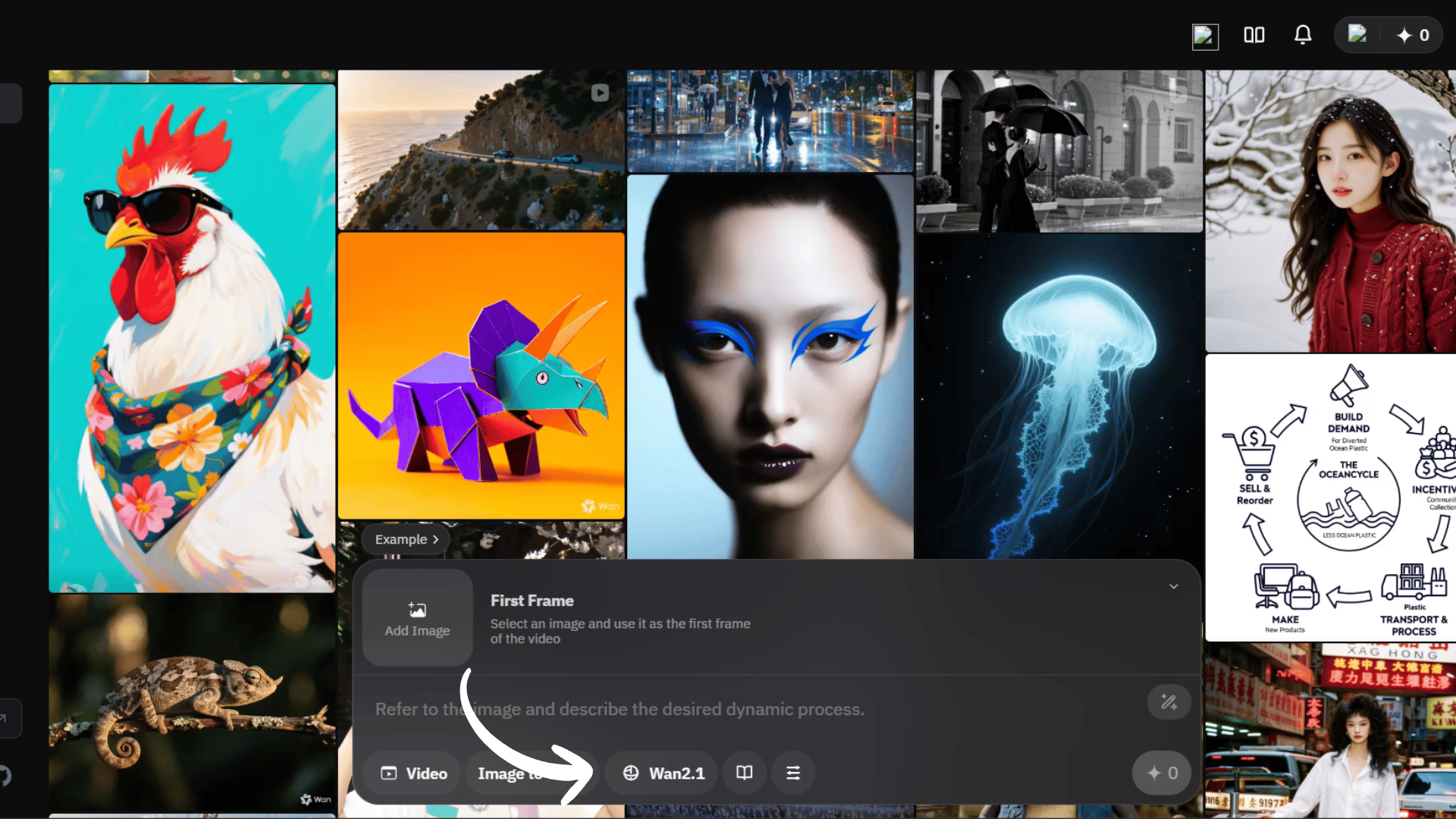

Once logged in, you’ll land on a clean, ChatGPT-like interface. At the bottom of the screen, you’ll see the main text box where you enter prompts.

Above it, you’ll find options for uploading an image, selecting resolution, choosing the model, setting the duration, and picking the media type.

STEP 2: Configure Your Settings

For this tutorial, make sure the Text-to-Video option is selected.

The model will default to WAN 2.5, which produces the highest quality results. Set your desired resolution and duration and make sure the media type is set correctly for your video.

STEP 3: Write Your Prompt and Generate

Now you’re ready to create your video. In the text box, type the instructions for what you want the AI to do.

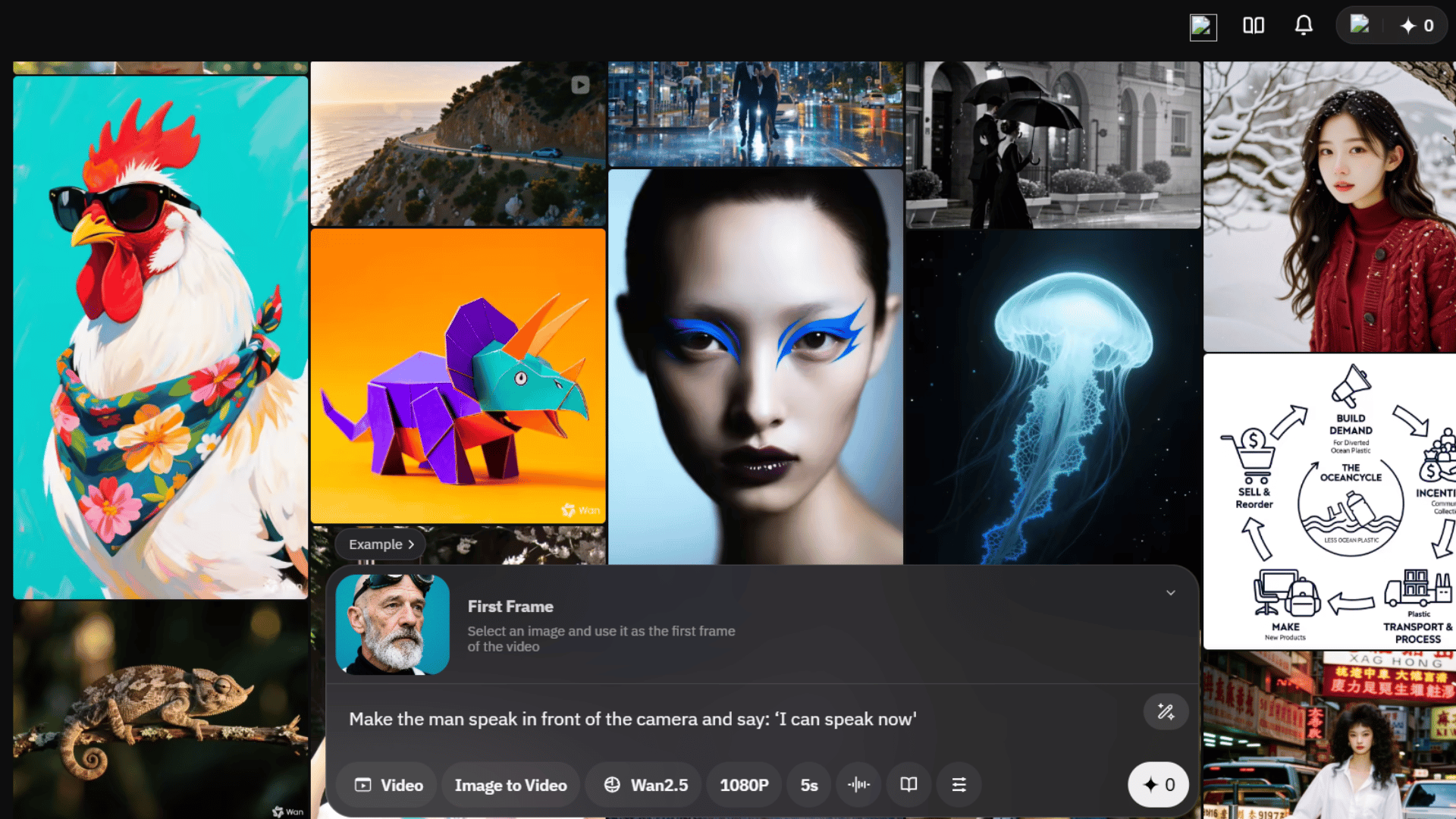

For example, I upload an image of a man and type: “Make the man speak in front of the camera and say: ‘I can speak now.’”

Click Submit and WAN will start processing. In under a minute, your video will be ready to view and download.
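If you plan to generate several talking-character clips, it can help to keep the prompt wording consistent. Here is a minimal Python sketch that rebuilds the example prompt above from two parts. The function name `build_speech_prompt` and its parameters are hypothetical, made up for illustration. The sketch only assembles text to paste into the prompt box and does not call WAN or any other service.

```python
def build_speech_prompt(subject: str, line: str) -> str:
    """Return a prompt asking the subject of an uploaded image to say a line.

    Hypothetical helper: it only formats a string for the prompt box.
    """
    subject = subject.strip()
    line = line.strip()
    # Refuse empty inputs so a half-finished prompt is never produced.
    if not subject or not line:
        raise ValueError("subject and line must both be non-empty")
    return f"Make {subject} speak in front of the camera and say: '{line}'"


if __name__ == "__main__":
    # Rebuilds the example prompt from this step.
    print(build_speech_prompt("the man", "I can speak now."))
```

Swap in a different subject or line to reuse the same structure across videos.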

BONUS

Since WAN 2.5 is no longer free after your first generation, you can use WAN 2.1 to generate videos without upgrading. Simply switch the model from WAN 2.5 to WAN 2.1 in the dropdown menu before submitting your prompt. The quality differs slightly, but the results are very similar, and you can keep experimenting or creating videos at no cost.

ESSENTIAL BITES

OpenAI valued at $500B, Oct. 2. Employees sold $6.6B in shares to investors including SoftBank, boosting the company from a $300B valuation.

Character AI removes Disney characters: Elsa, Moana, Spider-Man, and Darth Vader were pulled after a Disney cease-and-desist.

Thinking Machines Lab, led by ex-OpenAI CTO Mira Murati, launched Tinker, an API to customize AI models like Llama and Qwen. Free early access.

California passed SB 53, a first-of-its-kind AI safety law requiring transparency and safety protocols from large AI labs, showing regulation can coexist with innovation.

Russell Westbrook co-founded Eazewell, an AI platform that helps families handle end-of-life planning and digital accounts, easing the burden after a loved one’s death.

Google teases a Gemini-powered Google Home speaker for spring 2026: a $99 smart speaker with 360° audio, surround-sound pairing, the Gemini AI assistant, and an eco-friendly design, launching in multiple countries.

That's it! See you tomorrow

- Dr. Alvaro Cintas