- Simplifying AI

🧑‍💻 GPT-5.2-Codex just dropped

PLUS: How to use MCPs to turn your AI into a real working assistant

Good morning! OpenAI just released GPT-5.2-Codex, its most advanced agentic coding model yet, built for long-horizon software engineering and large-scale codebases.

In today’s AI newsletter:

GPT-5.2-Codex is here

OpenAI partners with the U.S. Department of Energy

Google releases two “Pocket Rocket” AI models

How to use MCPs to turn your AI into a real working assistant

4 new AI tools worth trying

AI NEWS

GPT-5.2-Codex is designed to handle complex, real-world engineering tasks with stronger reasoning, better context handling, and improved system reliability.

→ Tops SWE-Bench Pro with 56.4% accuracy, best-in-class for coding models

→ Native context compaction enables long, multi-step refactors without losing track

→ Stronger vision lets it turn screenshots, diagrams, and UI mockups into working code

→ Improved Windows performance and better AI-assisted vulnerability detection

Coding models are shifting from “code helpers” to autonomous engineering agents. With long-horizon reasoning, repo-wide refactoring, and security awareness, GPT-5.2-Codex pushes AI closer to handling serious production engineering, not just writing snippets.

AI PARTNERSHIPS

OpenAI and the U.S. Department of Energy (DOE) signed a memorandum of understanding to collaborate on AI and advanced computing, supporting the DOE’s Genesis Mission to accelerate scientific discovery.

Frontier AI models will be explored across DOE initiatives like fusion energy and advanced science

Builds on existing deployments at national labs such as Los Alamos, Livermore, and Sandia

Includes sharing expertise, coordinating research, and testing AI in real lab environments

Supports OpenAI for Science’s goal of speeding up hypothesis testing and discovery

This moves AI from theory into national-scale science infrastructure. By pairing frontier models with supercomputers and top researchers, AI could become a core scientific instrument, cutting years off discovery timelines in energy, physics, and bioscience.

AI MODELS

Google open-sourced T5Gemma 2 and FunctionGemma, ultra-efficient 270M-parameter models built for edge devices like phones and browsers.

T5Gemma 2 revives the encoder–decoder architecture for better reasoning, long context, and multimodal tasks

FunctionGemma is optimized for agents and function calling, less chatting, more doing

Both outperform same-size SOTA models despite being tiny

Designed to run efficiently on mobile and low-compute environments

This is Google betting on small, fast, useful AI rather than simply bigger models. By making powerful, agent-ready models run locally, Google is pushing AI closer to users, unlocking real-time actions, fewer hallucinations, and smarter assistants directly on your device.

HOW TO AI

🗂️ How to Use MCPs to Turn Your AI Into a Real Working Assistant

In this tutorial, you’ll learn how to connect MCP servers (MCP stands for Model Context Protocol) so your AI can browse the web, build UI components, and read up-to-date documentation instead of guessing.

🧰 Who Is This For

Developers who want AI to actually interact with tools

Founders and builders testing apps or automating tasks

Creators using Cursor or Claude Code

Beginners who want powerful AI without complex setup

What are MCPs?

MCP, short for Model Context Protocol, is an open standard that lets AI go beyond chatting. Each “MCP” you install is really an MCP server: once it’s connected, the AI can interact with real tools like your browser, documentation, or UI libraries. Instead of guessing, it can see what’s on screen, read real docs, and take real actions.
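For the curious, here is roughly what happens under the hood. MCP clients (like Cursor) talk to MCP servers using JSON-RPC 2.0 messages: a `tools/list` request asks a server which tools it offers, and a `tools/call` request runs one of them. The Python sketch below only builds those request payloads and does not open any connection; the tool name `navigate` and its `url` argument are hypothetical examples, not tools from any specific server.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 matches each response to its request by "id", so ids must be unique.
_request_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools_request() -> dict:
    # Ask the server which tools it exposes (names, descriptions, input schemas).
    return make_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    # Ask the server to run one tool with the given arguments.
    return make_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # "navigate" and its "url" argument are hypothetical, for illustration only.
    print(json.dumps(list_tools_request()))
    print(json.dumps(call_tool_request("navigate", {"url": "http://localhost:3000"})))
```

In a real session the client also performs an `initialize` handshake first; the official MCP SDKs and tools like Cursor handle all of this for you.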

MCP 1: 21st Dev (Magic MCP)

Start by creating an account on 21st.dev. Once you’re logged in, head to the console where you’ll see an option to create an API key. Click that, and you’ll be shown a command that already includes your key. Copy that command.

Now open Cursor’s built-in terminal, paste the command you just copied, and press Enter. This automatically sets up the Magic MCP server inside Cursor. When it finishes, open Cursor Settings, go to the MCP section, and you should see Magic MCP listed there. If its status shows red, just click refresh and it should turn green.
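If you’d rather confirm the install from a script than from the settings screen, here is a small sketch that lists the servers registered in Cursor’s MCP config. It assumes Cursor keeps its global MCP settings in `~/.cursor/mcp.json` under a top-level `mcpServers` object, and it matches on a keyword because the exact entry name the 21st.dev installer writes may vary. `list_servers` and `has_server` are hypothetical helpers, not part of Cursor.

```python
import json
from pathlib import Path
from typing import List

# Assumption: Cursor keeps its global MCP settings in ~/.cursor/mcp.json,
# with every server listed under a top-level "mcpServers" object.
DEFAULT_CONFIG = Path.home() / ".cursor" / "mcp.json"


def list_servers(config_path: Path = DEFAULT_CONFIG) -> List[str]:
    """Return the names of the MCP servers registered in the config file."""
    if not config_path.exists():
        return []
    data = json.loads(config_path.read_text(encoding="utf-8"))
    return sorted(data.get("mcpServers", {}))


def has_server(keyword: str, config_path: Path = DEFAULT_CONFIG) -> bool:
    # Case-insensitive substring match, because the exact entry name
    # the installer writes is not guaranteed.
    return any(keyword.lower() in name.lower() for name in list_servers(config_path))


if __name__ == "__main__":
    print("Registered servers:", list_servers())
    print("Magic MCP found:", has_server("magic"))
```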

To use it, open any file in your project and type /ui create a button component. Press Enter and wait a moment. A small popup will appear showing UI options from the 21st.dev library. Click the one you like. If Cursor asks you to run an install command, click run. The component is now added directly to your project and ready to use.

MCP 2: Browser MCP

Open Google Chrome and search for “Browser MCP.” Install the Chrome extension from the official page. Once installed, click the extension and it will open the documentation page automatically.

On that page, you’ll see instructions for setting up the MCP server. Copy the configuration shown there. Now switch back to Cursor, open Settings, go to the MCP section, paste the configuration, and save it. If needed, click refresh.
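If you prefer editing the config file directly instead of pasting into the settings screen, the sketch below adds one server entry to an `mcp.json` file without overwriting the servers already in it. It assumes Cursor’s `mcpServers` layout, and the `BROWSER_MCP` values are our assumption of what the Browser MCP docs show, so always paste the exact snippet from the official page. `merge_server` is a hypothetical helper.

```python
import json
from pathlib import Path

# Assumed shape of the Browser MCP entry; paste the exact values from its docs page.
BROWSER_MCP = {"command": "npx", "args": ["@browsermcp/mcp@latest"]}


def merge_server(config_path: Path, name: str, entry: dict) -> dict:
    """Add or replace one MCP server entry while keeping all other servers intact."""
    config = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8"))
    # Create "mcpServers" if missing, then set (or overwrite) just this one key.
    config.setdefault("mcpServers", {})[name] = entry
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, `merge_server(Path.home() / ".cursor" / "mcp.json", "browsermcp", BROWSER_MCP)` would register the entry at that assumed path; Cursor may still need a refresh to pick it up.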

Go back to Chrome, pin the Browser MCP extension so it’s easy to access, then click it and press Connect. You’ll see a message saying the Browser MCP can now automate this browser.

To try it, go to Cursor and type something like, “Using Browser MCP, go to localhost:3000/onboarding and check the flow.” Press Enter and watch your browser. Pages will open, buttons will click, and the flow will run automatically using your real logged-in session.

This is especially useful for testing apps or automating tasks because it behaves like a real human using the browser.

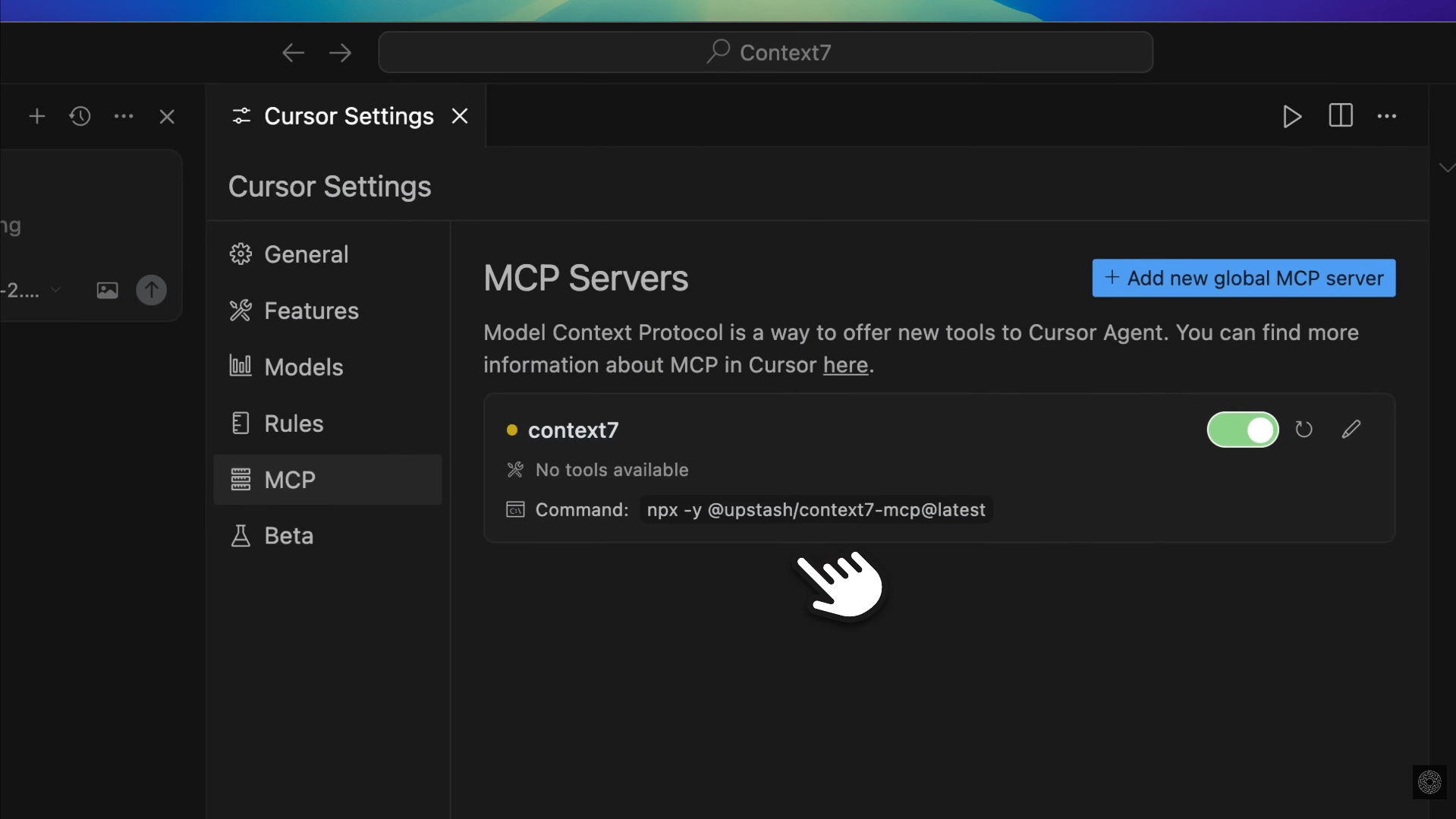

MCP 3: Context7

Context7 fixes a common problem where AI uses outdated documentation. Open context7.com and scroll to the installation section. Copy the MCP setup configuration provided there.

Open Cursor, go to Settings, navigate to MCP, add a new MCP, paste the configuration, and save it. If the tools don’t appear right away, click refresh or restart Cursor.
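When tools still don’t appear after a refresh, the cause is often a malformed entry or a missing launcher such as `npx` (which needs Node.js installed). The sketch below runs a few sanity checks on an `mcp.json`-style config: each entry should either name a `command` to launch locally or a `url` for a remote server. `check_entries` is a hypothetical helper, and the `~/.cursor/mcp.json` path is an assumption about where Cursor stores the config.

```python
import json
import shutil
from pathlib import Path
from typing import List


def check_entries(config: dict) -> List[str]:
    """Return human-readable problems found in an mcp.json-style config."""
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["no 'mcpServers' object found"]
    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be a JSON object")
            continue
        if "url" in entry:
            continue  # remote server: the client connects to it, nothing to launch
        command = entry.get("command")
        if not command:
            problems.append(f"{name}: needs either 'command' or 'url'")
        elif shutil.which(command) is None:
            # A missing "npx" usually means Node.js is not installed or not on PATH.
            problems.append(f"{name}: '{command}' not found on PATH")
        if not isinstance(entry.get("args", []), list):
            problems.append(f"{name}: 'args' must be a list")
    return problems


if __name__ == "__main__":
    # Assumed location of Cursor's global MCP config.
    path = Path.home() / ".cursor" / "mcp.json"
    if path.exists():
        config = json.loads(path.read_text(encoding="utf-8"))
        for line in check_entries(config) or ["no problems found"]:
            print(line)
    else:
        print(f"{path} does not exist")
```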

Once connected, you can ask the AI to use Context7 for accurate coding. For example, you can say, “Using Context7, build an MCP agent using MCPUs.” The AI will pull exact documentation, use correct imports, and avoid broken or outdated commands.

This is especially helpful in larger projects where wrong context can easily break things.

Pennsylvania's Supreme Court rules that police can get Google search data without a warrant; an expert warns it may encourage warrantless searches nationwide.

Meta is developing a new image and video-focused AI model codenamed Mango, expected to be released in H1 2026 along with its new LLM dubbed Avocado.

Google is working on a new initiative to make its AI chips run PyTorch better and is collaborating closely with Meta, as the two discuss Meta using more TPUs.

Samsung unveils the Exynos 2600, the world's first smartphone SoC built on a 2nm Gate-All-Around process, expected to power some Galaxy S26 and S26 Plus models.

NEW AI TOOLS

⚡ GPT-5.2-Codex: Built for long-horizon coding and large repos

🖼️ GPT Image 1.5: OpenAI’s latest, faster and more controllable image model

⚡ Gemini 3 Flash: Google’s fast, default AI model built for scale and everyday tasks

🤖 Nemotron 3: Nvidia’s open-source models for building multi-agent systems

THAT’S IT FOR TODAY

Thanks for making it to the end! I put my heart into every email I send, and I hope you’re enjoying it. Let me know your thoughts so I can make the next one even better!

See you tomorrow :)

- Dr. Alvaro Cintas